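React + three.js: Controlling Facial Expressions on a 3D Model

Series Contents

- Loading a glTF 3D model with three.js in React | Getting started with three.js

- React + three.js: 3D model skeleton binding

- React + three.js: Controlling facial expressions on a 3D model

- React + three.js: Syncing facial motion capture with 3D model expressions

- Building a desktop facial motion capture app with react-webcam, three.js, and electron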

Example project (GitHub): https://github.com/couchette/simple-react-three-facial-expression-demo

Example project (GitCode): https://gitcode.com/qq_41456316/simple-react-three-facial-expression-demo

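Preface

In the previous article in this series, we looked at how to render a 3D model with three.js in React. In this article, we look at how to use three.js to control the model's facial expressions, so that the model becomes more lively and expressive.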

I. Implementation Steps

1. Create the project and set up the environment

Create the project with create-react-app:

npx create-react-app simple-react-three-facial-expression-demo

cd simple-react-three-facial-expression-demo

Install three.js:

npm i three

2. Create the component

In the src directory, create a components folder, and inside it create a ThreeContainer.js file.

First, create the component and get a ref to the element it returns:

import * as THREE from "three";
import { useRef, useEffect } from "react";

function ThreeContainer() {
  const containerRef = useRef(null);
  const isContainerRunning = useRef(false);

  return <div ref={containerRef} />;
}

export default ThreeContainer;
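
The isContainerRunning ref is not used yet in this skeleton. In the full component it acts as a guard so that the scene is initialized only once, which matters because React 18's StrictMode (enabled by default in a create-react-app project) runs effects twice in development. The following is a minimal sketch of that guard pattern outside React. The runOnce helper and the plain object standing in for the ref are assumptions made for illustration and are not part of the example project.

```javascript
// Hypothetical helper: run `fn` only the first time it is requested.
// `flag` is any object with a mutable `current` field, like a React ref.
function runOnce(flag, fn) {
  if (flag.current) return false; // already started, skip
  flag.current = true;
  fn();
  return true;
}

// Plain object standing in for useRef(false).
const isContainerRunning = { current: false };

let initCalls = 0;
const init = () => {
  initCalls += 1; // in the real component this would build the scene
};

runOnce(isContainerRunning, init); // first effect run: init() executes
runOnce(isContainerRunning, init); // second effect run: skipped
console.log(initCalls); // 1
```

Without the guard, each effect run would create its own renderer and append a second canvas to the container.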

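Next, add the rendering element that three.js creates automatically as a child of the returned element (see container.appendChild(renderer.domElement);). The related logic runs inside useEffect. Note that the code loads the Basis transcoder from /basis/ and the model from models/facecap.glb, so in a create-react-app project both are expected to be served from the public folder. The complete code is as follows: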

import * as THREE from "three";
import WebGPU from "three/addons/capabilities/WebGPU.js";
import WebGL from "three/addons/capabilities/WebGL.js";
import WebGPURenderer from "three/addons/renderers/webgpu/WebGPURenderer.js";
import Stats from "three/addons/libs/stats.module.js";
import { OrbitControls } from "three/addons/controls/OrbitControls.js";
import { GLTFLoader } from "three/addons/loaders/GLTFLoader.js";
import { KTX2Loader } from "three/addons/loaders/KTX2Loader.js";
import { MeshoptDecoder } from "three/addons/libs/meshopt_decoder.module.js";
import { GUI } from "three/addons/libs/lil-gui.module.min.js";
import { useRef, useEffect } from "react";

function ThreeContainer() {
  const containerRef = useRef(null);
  const isContainerRunning = useRef(false);

  useEffect(() => {
    // Start the scene only once, and only after the container div exists.
    if (!isContainerRunning.current && containerRef.current) {
      isContainerRunning.current = true;
      init();
    }

    async function init() {
      if (
        WebGPU.isAvailable() === false &&
        WebGL.isWebGL2Available() === false
      ) {
        containerRef.current.appendChild(WebGPU.getErrorMessage());
        throw new Error("No WebGPU or WebGL2 support");
      }

      let mixer;
      const clock = new THREE.Clock();
      const container = containerRef.current;

      const camera = new THREE.PerspectiveCamera(
        45,
        window.innerWidth / window.innerHeight,
        1,
        20
      );
      camera.position.set(-1.8, 0.8, 3);

      const scene = new THREE.Scene();
      scene.add(new THREE.HemisphereLight(0xffffff, 0x443333, 2));

      const renderer = new WebGPURenderer({ antialias: true });
      renderer.setPixelRatio(window.devicePixelRatio);
      renderer.setSize(window.innerWidth, window.innerHeight);
      renderer.toneMapping = THREE.ACESFilmicToneMapping;
      renderer.setAnimationLoop(animate);
      // Attach the canvas created by three.js to the component's div.
      container.appendChild(renderer.domElement);

      const ktx2Loader = await new KTX2Loader()
        .setTranscoderPath("/basis/")
        .detectSupportAsync(renderer);

      new GLTFLoader()
        .setKTX2Loader(ktx2Loader)
        .setMeshoptDecoder(MeshoptDecoder)
        .load("models/facecap.glb", (gltf) => {
          const mesh = gltf.scene.children[0];
          scene.add(mesh);

          // Play the animation clip that ships with the model.
          mixer = new THREE.AnimationMixer(mesh);
          mixer.clipAction(gltf.animations[0]).play();

          // GUI
          const head = mesh.getObjectByName("mesh_2");
          const influences = head.morphTargetInfluences;

          //head.morphTargetInfluences = null;

          // WebGPURenderer: Unsupported texture format. 33776
          head.material.map = null;

          const gui = new GUI();
          gui.close();

          // One slider per morph target; each slider writes to influences[value].
          for (const [key, value] of Object.entries(
            head.morphTargetDictionary
          )) {
            gui
              .add(influences, value, 0, 1, 0.01)
              .name(key.replace("blendShape1.", ""))
              .listen();
          }
        });

      scene.background = new THREE.Color(0x666666);

      const controls = new OrbitControls(camera, renderer.domElement);
      controls.enableDamping = true;
      controls.minDistance = 2.5;
      controls.maxDistance = 5;
      controls.minAzimuthAngle = -Math.PI / 2;
      controls.maxAzimuthAngle = Math.PI / 2;
      controls.maxPolarAngle = Math.PI / 1.8;
      controls.target.set(0, 0.15, -0.2);

      const stats = new Stats();
      container.appendChild(stats.dom);

      // Called once per frame by renderer.setAnimationLoop.
      function animate() {
        const delta = clock.getDelta();
        if (mixer) {
          mixer.update(delta);
        }
        renderer.render(scene, camera);
        controls.update();
        stats.update();
      }

      // Keep the camera and canvas in sync with the window size.
      window.addEventListener("resize", () => {
        camera.aspect = window.innerWidth / window.innerHeight;
        camera.updateProjectionMatrix();
        renderer.setSize(window.innerWidth, window.innerHeight);
      });
    }
  }, []);

  return <div ref={containerRef} />;
}

export default ThreeContainer;
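
In the component above, an expression is controlled through two properties of the head mesh: morphTargetDictionary maps each blend shape name to an index, and morphTargetInfluences holds the weight (0 to 1) at that index. The GUI sliders simply write into that array. The sketch below shows the same idea without the GUI, so a weight can be set from code by name. The setExpression helper, the plain object standing in for the mesh, and the two blend shape names are assumptions for illustration; the real names depend on the model.

```javascript
// Stand-in for the head mesh. three.js meshes with morph targets expose
// these two properties; the names and indices here are placeholders.
const head = {
  morphTargetDictionary: {
    "blendShape1.jawOpen": 0,
    "blendShape1.mouthSmile_L": 1,
  },
  morphTargetInfluences: [0, 0],
};

// Hypothetical helper: set one blend shape by its short name.
// The weight is clamped to the [0, 1] range used by the GUI sliders.
function setExpression(mesh, name, weight, prefix = "blendShape1.") {
  const index = mesh.morphTargetDictionary[prefix + name];
  if (index === undefined) return false; // unknown blend shape name
  mesh.morphTargetInfluences[index] = Math.min(1, Math.max(0, weight));
  return true;
}

setExpression(head, "jawOpen", 0.6);
setExpression(head, "mouthSmile_L", 1.5); // out of range, clamped to 1
console.log(head.morphTargetInfluences); // weights are now 0.6 and 1
```

Because the sliders are created with .listen(), values written this way would also be reflected in the GUI. Keep in mind that the component plays the model's bundled animation clip through the AnimationMixer, which may overwrite the same influences on every frame while it is running.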

3. Use the component

Modify App.js as follows:

import "./App.css";
import ThreeContainer from "./components/ThreeContainer";

function App() {
  return (
    <div>
      <ThreeContainer />
    </div>
  );
}

export default App;
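
Since App mounts ThreeContainer like any other component, it can also unmount it. The effect in ThreeContainer registers a window resize listener that is never removed, which is fine for this demo but could leak in a larger app. The sketch below shows one possible way to make that listener removable. The attachResize helper and the fake window, camera, and renderer objects are assumptions for illustration and are not taken from the example project.

```javascript
// Hypothetical helper: wire up the same resize handling as init() and
// return a function that removes the listener again.
function attachResize(target, camera, renderer) {
  const onResize = () => {
    camera.aspect = target.innerWidth / target.innerHeight;
    camera.updateProjectionMatrix();
    renderer.setSize(target.innerWidth, target.innerHeight);
  };
  target.addEventListener("resize", onResize);
  return () => target.removeEventListener("resize", onResize);
}

// Tiny fakes standing in for window, the camera, and the renderer.
const listeners = new Set();
const fakeWindow = {
  innerWidth: 800,
  innerHeight: 400,
  addEventListener: (type, fn) => listeners.add(fn),
  removeEventListener: (type, fn) => listeners.delete(fn),
};
const fakeCamera = { aspect: 1, updateProjectionMatrix() {} };
const fakeRenderer = {
  size: null,
  setSize(width, height) {
    this.size = [width, height];
  },
};

const detach = attachResize(fakeWindow, fakeCamera, fakeRenderer);
listeners.forEach((fn) => fn()); // simulate a resize event
console.log(fakeCamera.aspect); // 2
detach();
console.log(listeners.size); // 0
```

In the real component, the function returned from useEffect would be a natural place to call detach() and to stop the loop with renderer.setAnimationLoop(null). With the isContainerRunning guard in place, that cleanup would probably also need to reset the flag, otherwise the scene would not restart when the component is mounted again.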

4. 运行项目

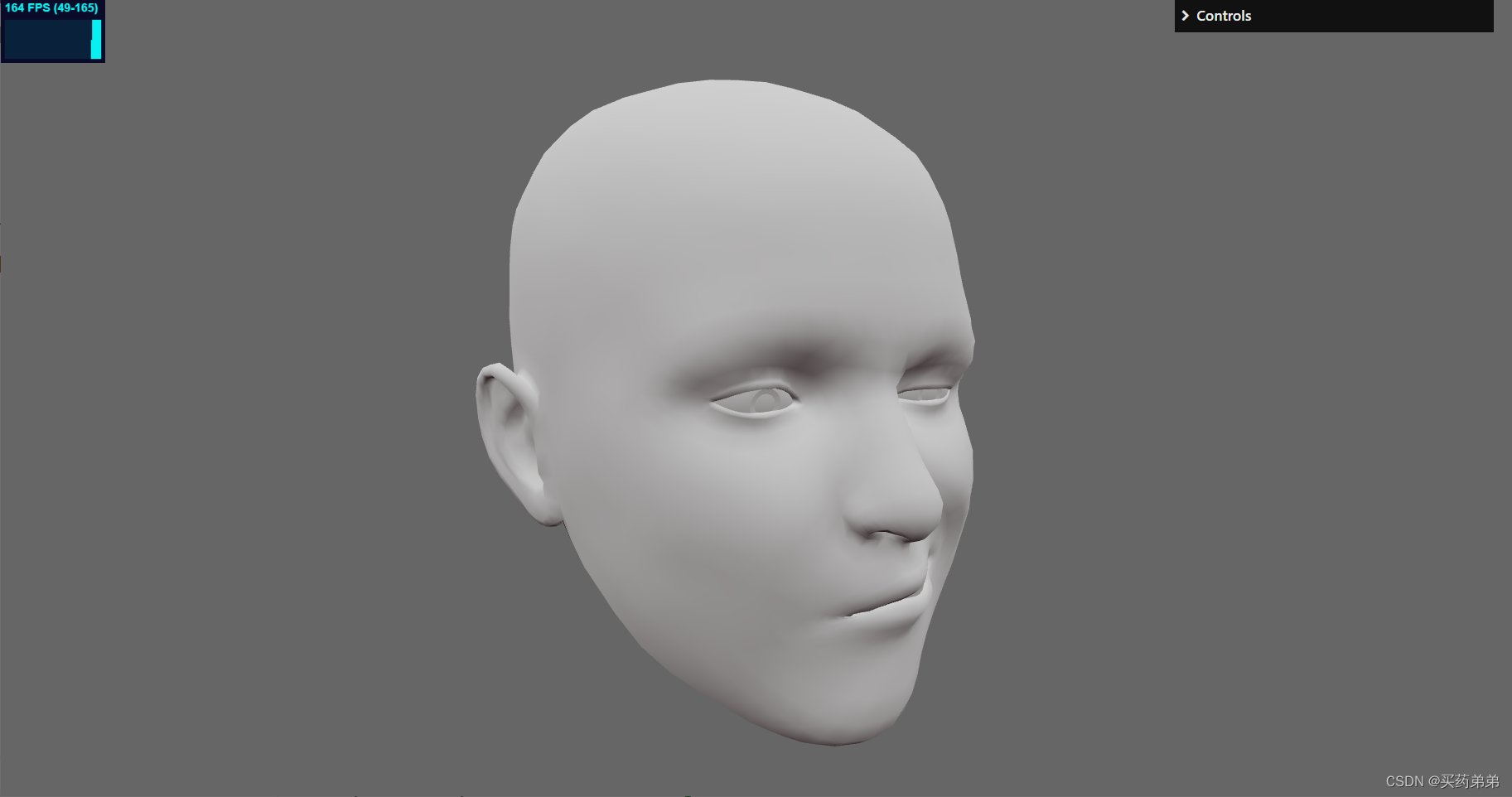

运行项目npm start最终效果如下

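Summary

This article should give readers a first idea of how to control a 3D model's facial expressions in React. If this interests you, try it out yourself. You are also welcome to explore further and see how far React and three.js can go in making web applications more engaging.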


Original article: https://blog.csdn.net/qq_41456316/article/details/137648626
