Lesson 15: Ceph Cluster Management and Monitoring Guide


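1. View the cluster status

The cluster status shows the health of the cluster, the running state of each component (mon, mgr, mds, osd, rgw), and how much data is stored.

There are two ways to view the cluster status:

- Run the ceph command with the -s (or status) argument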

[root@ceph-node-1 ~]# ceph -s

cluster:

id: a5ec192a-8d13-4624-b253-5b350a616041

health: HEALTH_OK

services:

mon: 3 daemons, quorum ceph-node-1,ceph-node-2,ceph-node-3 (age 2d)

mgr: ceph-node-1(active, since 2d), standbys: ceph-node-2, ceph-node-3

mds: cephfs-storage:1 {0=ceph-node-2=up:active} 2 up:standby

osd: 7 osds: 7 up (since 95m), 7 in (since 2d); 1 remapped pgs

rgw: 1 daemon active (ceph-node-1)

task status:

data:

pools: 9 pools, 240 pgs

objects: 275 objects, 588 KiB

usage: 7.6 GiB used, 62 GiB / 70 GiB avail

pgs: 239 active+clean

1 active+clean+remapped

- Run status from the interactive ceph shell

[root@ceph-node-1 ~]# ceph

ceph> status

cluster:

id: a5ec192a-8d13-4624-b253-5b350a616041

health: HEALTH_OK

services:

mon: 3 daemons, quorum ceph-node-1,ceph-node-2,ceph-node-3 (age 2d)

mgr: ceph-node-1(active, since 2d), standbys: ceph-node-2, ceph-node-3

mds: cephfs-storage:1 {0=ceph-node-2=up:active} 2 up:standby

osd: 7 osds: 7 up (since 95m), 7 in (since 2d); 1 remapped pgs

rgw: 1 daemon active (ceph-node-1)

task status:

data:

pools: 9 pools, 240 pgs

objects: 275 objects, 588 KiB

usage: 7.6 GiB used, 62 GiB / 70 GiB avail

pgs: 239 active+clean

1 active+clean+remapped
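For scripted monitoring (cron jobs, Nagios-style checks), the overall health keyword is often all you need. The helper below is a minimal sketch that maps the first word of ceph health output to the conventional monitoring exit codes. The function name health_to_exit is hypothetical, and it assumes the status keyword (HEALTH_OK, HEALTH_WARN, HEALTH_ERR) is the first word of the output, as in the status shown above.

```shell
# Map `ceph health` output (read on stdin) to a Nagios-style exit code:
# 0 = HEALTH_OK, 1 = HEALTH_WARN, 2 = HEALTH_ERR, 3 = anything else.
# Assumption: the status keyword is the first word of the first line.
health_to_exit() {
    local status=""
    read -r status _ || true   # tolerate empty input or a missing trailing newline
    case "$status" in
        HEALTH_OK)   return 0 ;;
        HEALTH_WARN) return 1 ;;
        HEALTH_ERR)  return 2 ;;
        *)           return 3 ;;   # unknown or empty output
    esac
}

# Example (on a node with an admin keyring):
#   ceph health | health_to_exit; echo "exit code: $?"
```

Returning distinct codes rather than printing text lets a scheduler or monitoring agent react to the result without parsing anything further.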

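2. Watch the cluster status continuously

This works much like tail -f: ceph -w first prints the current cluster status and then keeps running, printing new cluster log events as they happen, until you press Ctrl+C.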

[root@ceph-node-1 ~]# ceph -w

cluster:

id: a5ec192a-8d13-4624-b253-5b350a616041

health: HEALTH_OK

services:

mon: 3 daemons, quorum ceph-node-1,ceph-node-2,ceph-node-3 (age 2d)

mgr: ceph-node-1(active, since 2d), standbys: ceph-node-2, ceph-node-3

mds: cephfs-storage:1 {0=ceph-node-2=up:active} 2 up:standby

osd: 7 osds: 7 up (since 99m), 7 in (since 2d); 1 remapped pgs

rgw: 1 daemon active (ceph-node-1)

task status:

data:

pools: 9 pools, 240 pgs

objects: 275 objects, 588 KiB

usage: 7.6 GiB used, 62 GiB / 70 GiB avail

pgs: 239 active+clean

1 active+clean+remapped
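On a busy cluster the ceph -w stream is mostly informational messages. A minimal sketch of a filter that keeps only warnings and errors is shown below; the function name filter_cluster_log is hypothetical, and it assumes cluster log lines tag their severity as [INF], [WRN] or [ERR].

```shell
# Keep only warning and error events from a `ceph -w` stream on stdin.
# Assumption: cluster log lines carry a bracketed severity tag such as [WRN] or [ERR].
# --line-buffered flushes each match immediately, which matters for a live stream.
filter_cluster_log() {
    grep --line-buffered -E '\[(WRN|ERR)\]'
}

# Example: follow the cluster log and show only problems (Ctrl+C to stop):
#   ceph -w | filter_cluster_log
# For a periodically refreshed full status instead of an event stream:
#   watch -n 5 ceph -s
```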

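3. View cluster utilization

The ceph df command shows the cluster's resource utilization: the total capacity of the Ceph cluster, the available and used capacity, and the usage of each pool in the cluster.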

[root@ceph-node-1 ~]# ceph df

RAW STORAGE:

CLASS SIZE AVAIL USED RAW USED %RAW USED

hdd 70 GiB 62 GiB 644 MiB 7.6 GiB 10.90

TOTAL 70 GiB 62 GiB 644 MiB 7.6 GiB 10.90

POOLS:

POOL ID PGS STORED OBJECTS USED %USED MAX AVAIL

ceph-rbd-data 1 16 19 B 3 192 KiB 0 20 GiB

.rgw.root 2 32 1.2 KiB 4 768 KiB 0 20 GiB

default.rgw.control 3 32 0 B 8 0 B 0 20 GiB

default.rgw.meta 4 32 3.4 KiB 19 3 MiB 0 20 GiB

default.rgw.log 5 32 0 B 207 0 B 0 20 GiB

default.rgw.buckets.index 6 32 0 B 3 0 B 0 20 GiB

default.rgw.buckets.data 7 32 157 KiB 8 1.9 MiB 0 20 GiB

cephfs_metadata 8 16 426 KiB 23 2.8 MiB 0 20 GiB

cephfs_data 9 16 0 B 0 0 B 0 20 GiB
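To spot pools that are filling up, the POOLS table of ceph df can be filtered with awk. This is a minimal sketch: the function name pools_over is hypothetical, and it assumes the Nautilus layout shown above, where %USED is the third column from the end (MAX AVAIL prints as a number plus a unit). Other releases may lay the table out differently; for production monitoring, the JSON output (ceph df -f json) is more robust than parsing text.

```shell
# Print "<pool> <%USED>" for every pool whose %USED exceeds the threshold ($1).
# Reads plain `ceph df` output on stdin.
# Assumption: %USED is the third-from-last column of each pool row.
pools_over() {
    awk -v t="$1" '
        /POOLS/ { in_pools = 1; next }               # start of the pools section
        in_pools && NF >= 9 && $1 != "POOL" {        # skip the column header row
            if ($(NF-2) + 0 > t + 0) print $1, $(NF-2)
        }
    '
}

# Example: list pools that are more than 80% used
#   ceph df | pools_over 80
```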

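4. View OSD utilization

The ceph osd df command shows the utilization of every OSD in the cluster, including its usage percentage, available space, and status. Use it to keep an eye on how much free space each OSD has left, because a full OSD causes problems for the whole cluster: by default Ceph raises a nearfull warning at 85% and blocks writes once an OSD reaches the full ratio (95%). When OSDs run short of space, either expand capacity by adding OSDs or delete data stored by the upper-layer clients.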

[root@ceph-node-1 ~]# ceph osd df

ID CLASS WEIGHT REWEIGHT SIZE RAW USE DATA OMAP META AVAIL %USE VAR PGS STATUS

0 hdd 0.00980 1.00000 10 GiB 1.1 GiB 92 MiB 23 KiB 1024 MiB 8.9 GiB 10.90 1.00 79 up

3 hdd 0.00980 1.00000 10 GiB 1.1 GiB 92 MiB 20 KiB 1024 MiB 8.9 GiB 10.91 1.00 93 up

6 hdd 0.00980 1.00000 10 GiB 1.1 GiB 91 MiB 20 KiB 1024 MiB 8.9 GiB 10.89 1.00 68 up

1 hdd 0.00980 1.00000 10 GiB 1.1 GiB 92 MiB 0 B 1 GiB 8.9 GiB 10.90 1.00 74 up

4 hdd 0.00980 1.00000 10 GiB 1.1 GiB 92 MiB 0 B 1 GiB 8.9 GiB 10.90 1.00 74 up

7 hdd 0.00980 1.00000 10 GiB 1.1 GiB 91 MiB 0 B 1 GiB 8.9 GiB 10.90 1.00 92 up

2 hdd 0.00980 1.00000 10 GiB 1.1 GiB 94 MiB 31 KiB 1024 MiB 8.9 GiB 10.92 1.00 240 up

TOTAL 70 GiB 7.6 GiB 644 MiB 95 KiB 7.0 GiB 62 GiB 10.90
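The same idea applies per OSD: compare each OSD's %USE with a threshold such as the nearfull ratio. This is a minimal sketch; the function name osds_over is hypothetical, and it assumes the Nautilus ceph osd df layout shown above, where %USE is the fourth column from the end (followed by VAR, PGS and STATUS). The ratios actually configured on a cluster can be checked with ceph osd dump | grep ratio.

```shell
# Print "osd.<id> <%USE>" for every OSD whose %USE exceeds the threshold ($1).
# Reads plain `ceph osd df` output on stdin.
# Assumption: %USE is the fourth-from-last column of each OSD row.
osds_over() {
    awk -v t="$1" '
        $1 ~ /^[0-9]+$/ && NF >= 19 {                # OSD rows start with a numeric ID
            if ($(NF-3) + 0 > t + 0) print "osd." $1, $(NF-3)
        }
    '
}

# Example: flag OSDs above the default nearfull ratio (85%)
#   ceph osd df | osds_over 85
```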

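5. View the OSD list

ceph osd tree lists every OSD under its host in the CRUSH hierarchy, together with its weight and its up/down status.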

[root@ceph-node-1 ~]# ceph osd tree

ID CLASS WEIGHT TYPE NAME STATUS REWEIGHT PRI-AFF

-1 0.06857 root default

-3 0.02939 host ceph-node-1

0 hdd 0.00980 osd.0 up 1.00000 1.00000

3 hdd 0.00980 osd.3 up 1.00000 1.00000

6 hdd 0.00980 osd.6 up 1.00000 1.00000

-5 0.02939 host ceph-node-2

1 hdd 0.00980 osd.1 up 1.00000 1.00000

4 hdd 0.00980 osd.4 up 1.00000 1.00000

7 hdd 0.00980 osd.7 up 1.00000 1.00000

-7 0.00980 host ceph-node-3

2 hdd 0.00980 osd.2 up 1.00000 1.00000
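When an OSD goes down, the tree view also tells you which host it lives on. The sketch below walks plain ceph osd tree output and reports every OSD whose STATUS is not up, together with its host. The function name down_osds is hypothetical, and it assumes the layout shown above, where host rows contain the word host followed by the host name and the STATUS column directly follows the osd.N name.

```shell
# Print "<host> <osd> <status>" for every OSD whose STATUS is not "up".
# Reads plain `ceph osd tree` output on stdin.
down_osds() {
    awk '
        {
            for (i = 1; i < NF; i++) {
                if ($i == "host") { host = $(i+1); break }      # remember the current host
                if ($i ~ /^osd\.[0-9]+$/) {                     # OSD row: status follows the name
                    if ($(i+1) != "up") print host, $i, $(i+1)
                    break
                }
            }
        }
    '
}

# Example:
#   ceph osd tree | down_osds
```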

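6. View the status of each component

View a component's status:

Command format: ceph {component} stat

View a component's detailed information:

Command format: ceph {component} dump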

[root@ceph-node-1 ~]# ceph mon stat

e1: 3 mons at {ceph-node-1=[v2:192.168.20.20:3300/0,v1:192.168.20.20:6789/0],ceph-node-2=[v2:192.168.20.21:3300/0,v1:192.168.20.21:6789/0],ceph-node-3=[v2:192.168.20.22:3300/0,v1:192.168.20.22:6789/0]}, election epoch 44, leader 0 ceph-node-1, quorum 0,1,2 ceph-node-1,ceph-node-2,ceph-node-3

[root@ceph-node-1 ~]# ceph mon dump

epoch 1

fsid a5ec192a-8d13-4624-b253-5b350a616041

last_changed 2022-04-02 22:09:57.238072

created 2022-04-02 22:09:57.238072

min_mon_release 14 (nautilus)

0: [v2:192.168.20.20:3300/0,v1:192.168.20.20:6789/0] mon.ceph-node-1

1: [v2:192.168.20.21:3300/0,v1:192.168.20.21:6789/0] mon.ceph-node-2

2: [v2:192.168.20.22:3300/0,v1:192.168.20.22:6789/0] mon.ceph-node-3
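Because the stat/dump pattern is the same for every component, it is easy to save a snapshot of all of them before a maintenance window or when troubleshooting. This is a minimal sketch; the function name snapshot_components and the CEPH override variable (which lets a test stub replace the real ceph binary) are hypothetical. It uses ceph fs dump for the file-system/MDS map, and leaves out ceph pg dump, which can be very large on big clusters.

```shell
# Save `stat` and `dump` output of several components into a directory ($1),
# one file per command, e.g. mon-stat.txt or osd-dump.txt.
# CEPH may be set to another command for testing; it defaults to "ceph".
snapshot_components() {
    local outdir="$1" c
    mkdir -p "$outdir"
    for c in mon osd mds pg; do
        "${CEPH:-ceph}" "$c" stat > "$outdir/$c-stat.txt"
    done
    for c in mon osd mgr fs; do
        "${CEPH:-ceph}" "$c" dump > "$outdir/$c-dump.txt"
    done
}

# Example:
#   snapshot_components /tmp/ceph-snapshot-$(date +%F)
```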

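7. View the cluster quorum information

ceph quorum_status prints, as JSON, which monitors are currently in quorum (quorum_names), which one is the leader (quorum_leader_name), and the full monitor map.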

[root@ceph-node-1 ~]# ceph quorum_status

{"election_epoch":44,"quorum":[0,1,2],"quorum_names":["ceph-node-1","ceph-node-2","ceph-node-3"],"quorum_leader_name":"ceph-node-1","quorum_age":193280,"monmap":{"epoch":1,"fsid":"a5ec192a-8d13-4624-b253-5b350a616041","modified":"2022-04-02 22:09:57.238072","created":"2022-04-02 22:09:57.238072","min_mon_release":14,"min_mon_release_name":"nautilus","features":{"persistent":["kraken","luminous","mimic","osdmap-prune","nautilus"],"optional":[]},"mons":[{"rank":0,"name":"ceph-node-1","public_addrs":{"addrvec":[{"type":"v2","addr":"192.168.20.20:3300","nonce":0},{"type":"v1","addr":"192.168.20.20:6789","nonce":0}]},"addr":"192.168.20.20:6789/0","public_addr":"192.168.20.20:6789/0"},{"rank":1,"name":"ceph-node-2","public_addrs":{"addrvec":[{"type":"v2","addr":"192.168.20.21:3300","nonce":0},{"type":"v1","addr":"192.168.20.21:6789","nonce":0}]},"addr":"192.168.20.21:6789/0","public_addr":"192.168.20.21:6789/0"},{"rank":2,"name":"ceph-node-3","public_addrs":{"addrvec":[{"type":"v2","addr":"192.168.20.22:3300","nonce":0},{"type":"v1","addr":"192.168.20.22:6789","nonce":0}]},"addr":"192.168.20.22:6789/0","public_addr":"192.168.20.22:6789/0"}]}}

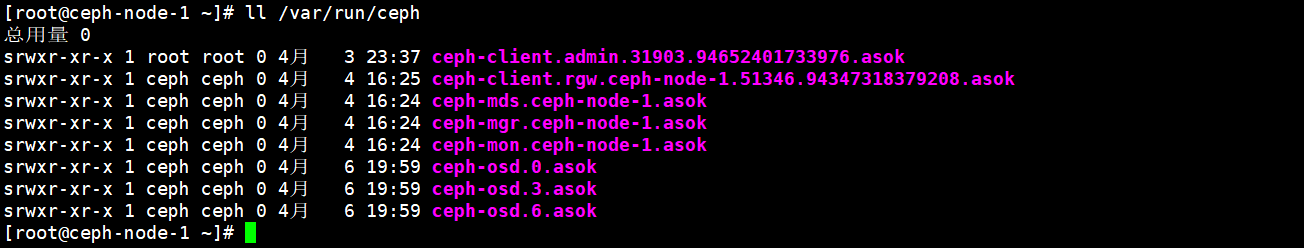
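A common check is whether every monitor in the monmap is actually in quorum. The sketch below compares the monmap's monitor names with quorum_names. The function name mons_out_of_quorum is hypothetical, and it avoids jq by relying on the compact single-line JSON shown above, where "name" keys appear only in the mons entries. If jq is installed, jq -r '.quorum_names[]' and jq -r '.monmap.mons[].name' are a cleaner way to extract the two lists.

```shell
# Read `ceph quorum_status` JSON on stdin and print every monitor that is
# listed in the monmap but missing from quorum_names (no output = all in quorum).
# Assumption: compact JSON in which "name":"..." pairs occur only in monmap.mons.
mons_out_of_quorum() {
    local json quorum name
    json=$(cat)
    quorum=$(printf '%s' "$json" | grep -o '"quorum_names":\[[^]]*]' || true)
    printf '%s' "$json" | grep -o '"name":"[^"]*"' | cut -d'"' -f4 |
    while read -r name; do
        case "$quorum" in
            *"\"$name\""*) ;;        # monitor is in quorum
            *) echo "$name" ;;       # monitor is missing from quorum
        esac
    done
}

# Example:
#   ceph quorum_status | mons_out_of_quorum
```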

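8. View/modify component configuration through the admin socket

View a component's configuration:

Command format: ceph --admin-daemon {socket file path} config show

Set a configuration parameter through the component's socket:

Command format: ceph --admin-daemon {socket file path} config set {parameter} {value}

Get a configuration parameter through the component's socket:

Command format: ceph --admin-daemon {socket file path} config get {parameter}

The admin socket (.asok) files of the cluster components are located under /var/run/ceph by default. A socket only exists on the host where its daemon runs, so these commands must be run on that host.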

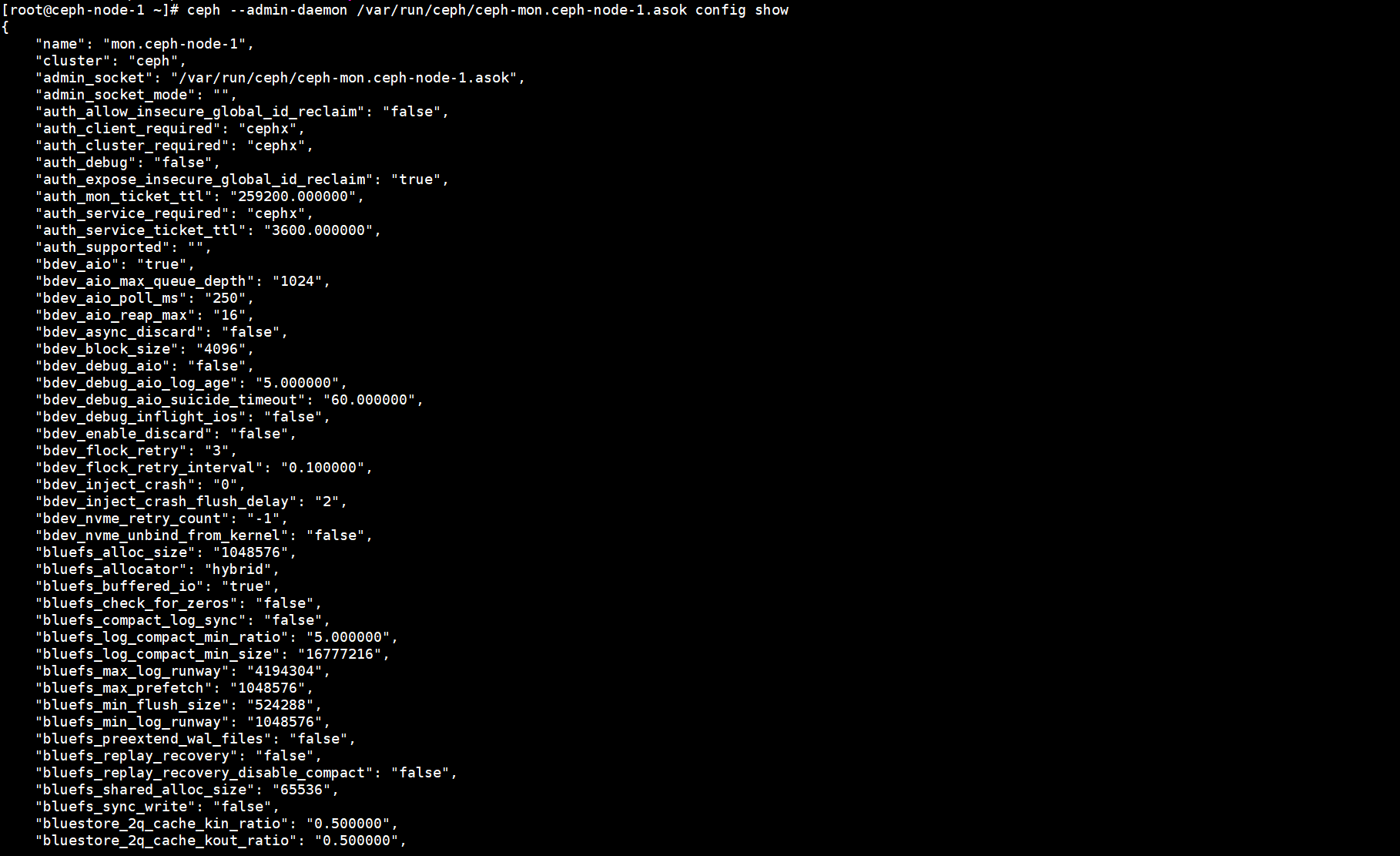

1) View a component's configuration

[root@ceph-node-1 ~]# ceph --admin-daemon /var/run/ceph/ceph-mon.ceph-node-1.asok config show

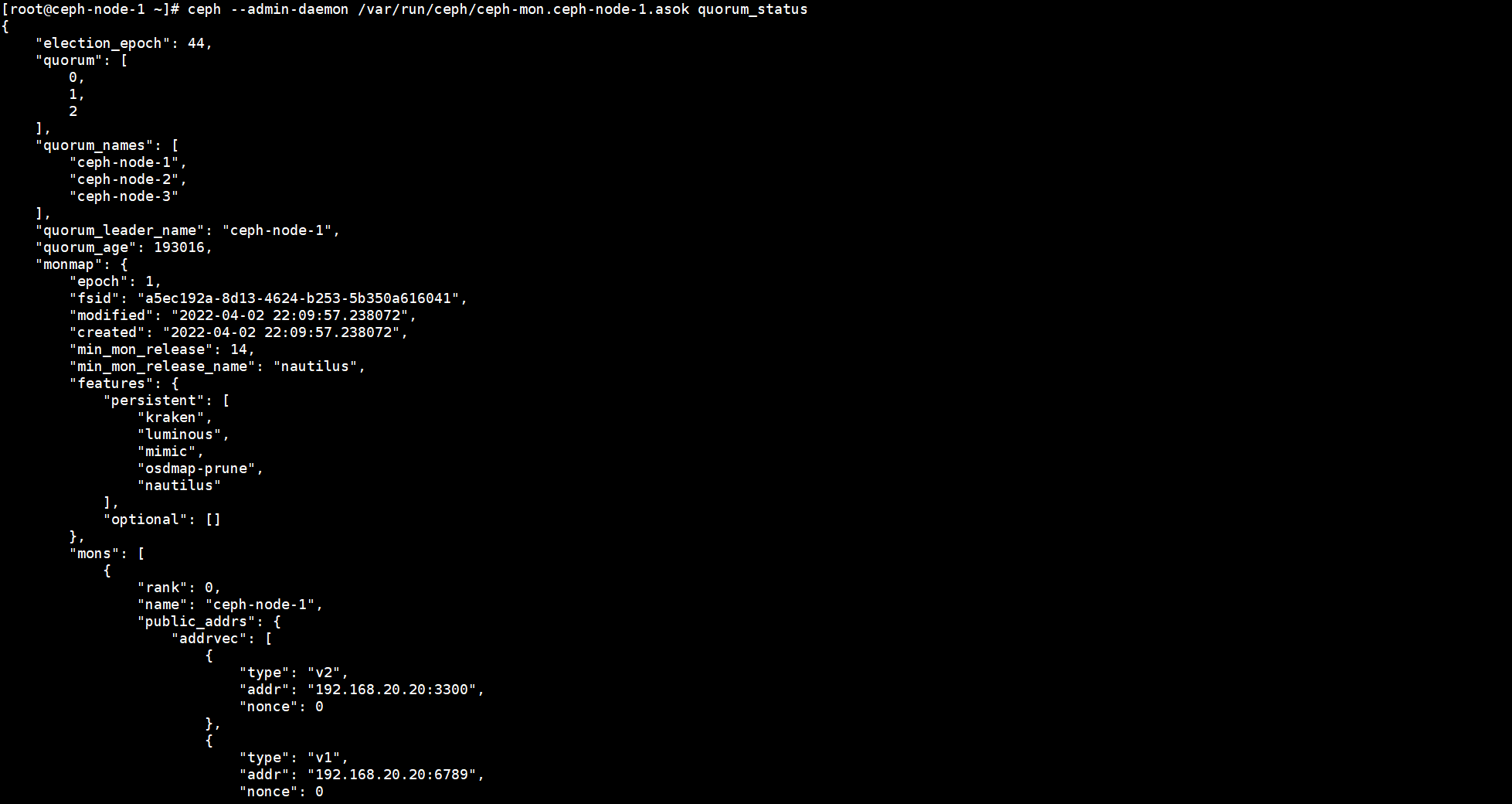

2) View the mon's quorum and election information

[root@ceph-node-1 ~]# ceph --admin-daemon /var/run/ceph/ceph-mon.ceph-node-1.asok quorum_status

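3) Adjust a mon configuration parameter (this changes only the running daemon's in-memory configuration; it is not saved and is lost when the daemon restarts)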

[root@ceph-node-1 ~]# ceph --admin-daemon /var/run/ceph/ceph-mon.ceph-node-1.asok config set mon_clock_drift_allowed 1.0

{

"success": "mon_clock_drift_allowed = '1.000000' (not observed, change may require restart) "

}
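Since every daemon on a host has its own socket, checking one parameter across all of them means looping over the socket directory. This is a minimal sketch; the function name get_param_all_daemons and the SOCK_DIR and CEPH override variables (used to point it at a test directory and a stub command) are hypothetical. For a single daemon, ceph daemon mon.ceph-node-1 config get mon_clock_drift_allowed is a shorthand that locates the socket by daemon name.

```shell
# For every admin socket in the socket directory, print "<daemon>: " followed by
# the value of one configuration parameter ($1), as returned by `config get`.
# SOCK_DIR defaults to /var/run/ceph; CEPH defaults to "ceph".
get_param_all_daemons() {
    local param="$1" sock
    for sock in "${SOCK_DIR:-/var/run/ceph}"/*.asok; do
        [ -e "$sock" ] || continue                  # glob matched nothing
        printf '%s: ' "$(basename "$sock" .asok)"   # e.g. "ceph-mon.ceph-node-1: "
        "${CEPH:-ceph}" --admin-daemon "$sock" config get "$param"
    done
}

# Example: compare the clock-drift tolerance of every daemon on this host
#   get_param_all_daemons mon_clock_drift_allowed
```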

Original article: https://blog.csdn.net/weixin_44953658/article/details/137855872
