Using HuggingFacePipeline, Gradio, and chatglm3-6b

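```python
from langchain_community.llms import HuggingFacePipeline
from langchain.prompts import PromptTemplate
import gradio as gr

# Load ChatGLM3-6B into a local transformers text-generation pipeline.
# trust_remote_code=True is required because ChatGLM3 ships its own model code.
hf = HuggingFacePipeline.from_model_id(
    model_id="THUDM/chatglm3-6b",
    task="text-generation",
    device=0,  # first CUDA GPU
    model_kwargs={"trust_remote_code": True},
    pipeline_kwargs={"max_new_tokens": 500},
)

# The template passes the user's question through unchanged.
template = """{question}"""
prompt = PromptTemplate.from_template(template)

# Fill the template, then send the resulting string to the model.
chain = prompt | hf


def greet2(name):
    # `name` is the user's chat message; the chain returns the reply as a string.
    response = chain.invoke({"question": name})
    return response


def alternatingly_agree(message, history):
    # Gradio's ChatInterface calls this with the new message and the chat history.
    # The history is ignored here, so each message is answered independently.
    return greet2(message)


gr.ChatInterface(alternatingly_agree).launch()
```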
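The `alternatingly_agree` handler receives `history` but never uses it, so the model sees every message in isolation. Below is a minimal sketch of one way to fold earlier turns into the prompt. The helper name `build_prompt` and the `User:`/`Assistant:` plain-text layout are hypothetical choices, not ChatGLM3's own chat template, and the code assumes Gradio passes `history` either as `[user, assistant]` pairs or as `{"role": ..., "content": ...}` dicts, depending on the Gradio version and `ChatInterface` settings.

```python
from typing import Any, Sequence


def build_prompt(message: str, history: Sequence[Any]) -> str:
    """Flatten earlier chat turns plus the new message into one prompt string.

    Hypothetical helper. Assumes `history` is either a list of
    [user, assistant] pairs (Gradio "tuples" format) or a list of
    {"role": ..., "content": ...} dicts (Gradio "messages" format).
    """
    lines = []
    for turn in history:
        if isinstance(turn, dict):
            # "messages" format: one dict per message
            speaker = "User" if turn.get("role") == "user" else "Assistant"
            lines.append(f"{speaker}: {turn.get('content', '')}")
        else:
            # "tuples" format: one (user, assistant) pair per exchange
            user_msg, bot_msg = turn
            lines.append(f"User: {user_msg}")
            if bot_msg is not None:
                lines.append(f"Assistant: {bot_msg}")
    lines.append(f"User: {message}")
    lines.append("Assistant:")  # cue the model to answer as the assistant
    return "\n".join(lines)


if __name__ == "__main__":
    demo_history = [["Hi", "Hello! How can I help?"]]
    print(build_prompt("What is ChatGLM3?", demo_history))
```

To try it with the script above, the handler could return `greet2(build_prompt(message, history))`. Because the prompt grows with every turn, long conversations may need trimming to stay within the model's context window.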

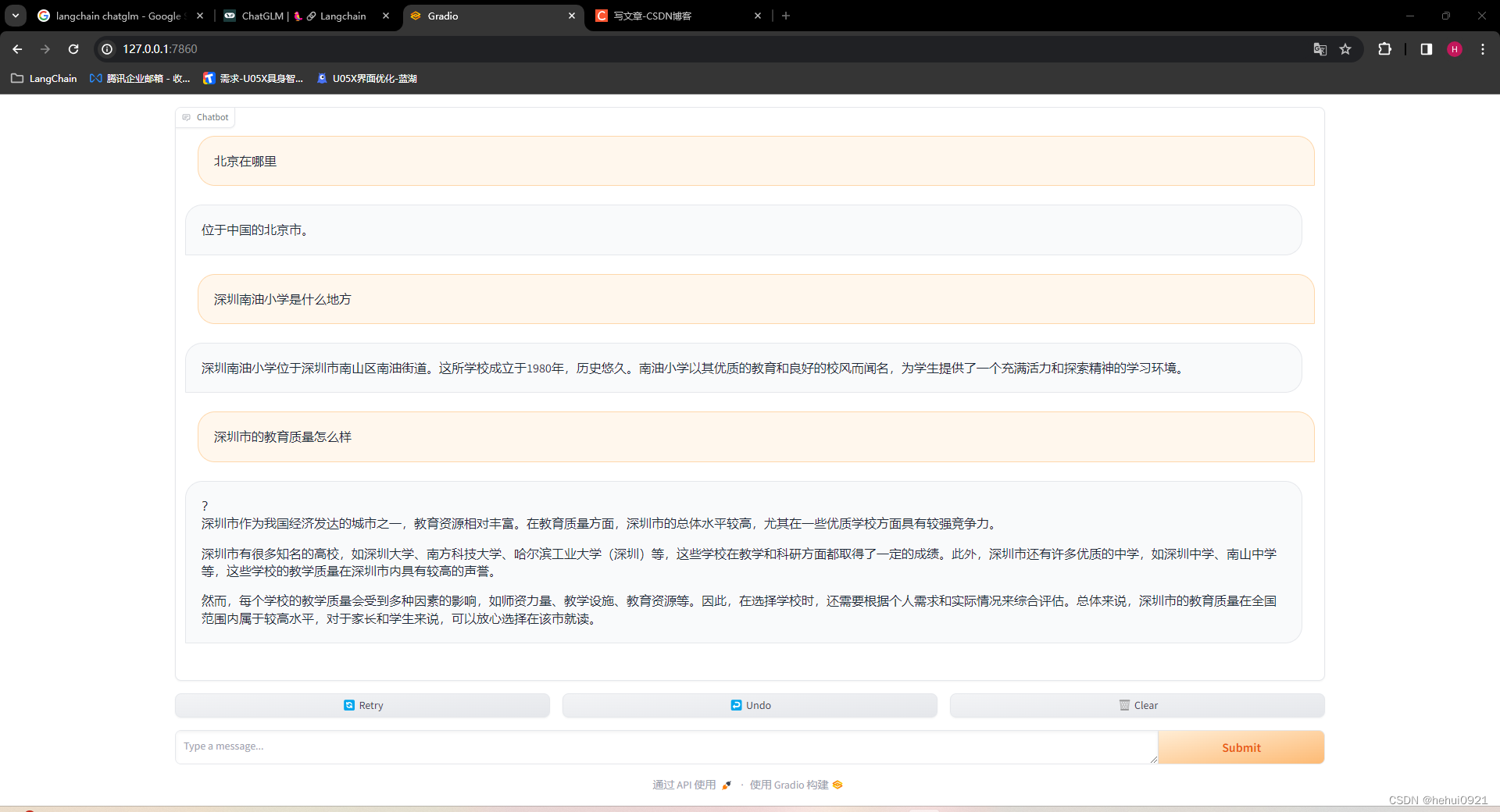

最终效果:

Original article: https://blog.csdn.net/oHeHui1/article/details/136411756
