Python data analysis with pyecharts: pie chart, bar chart, word cloud, map


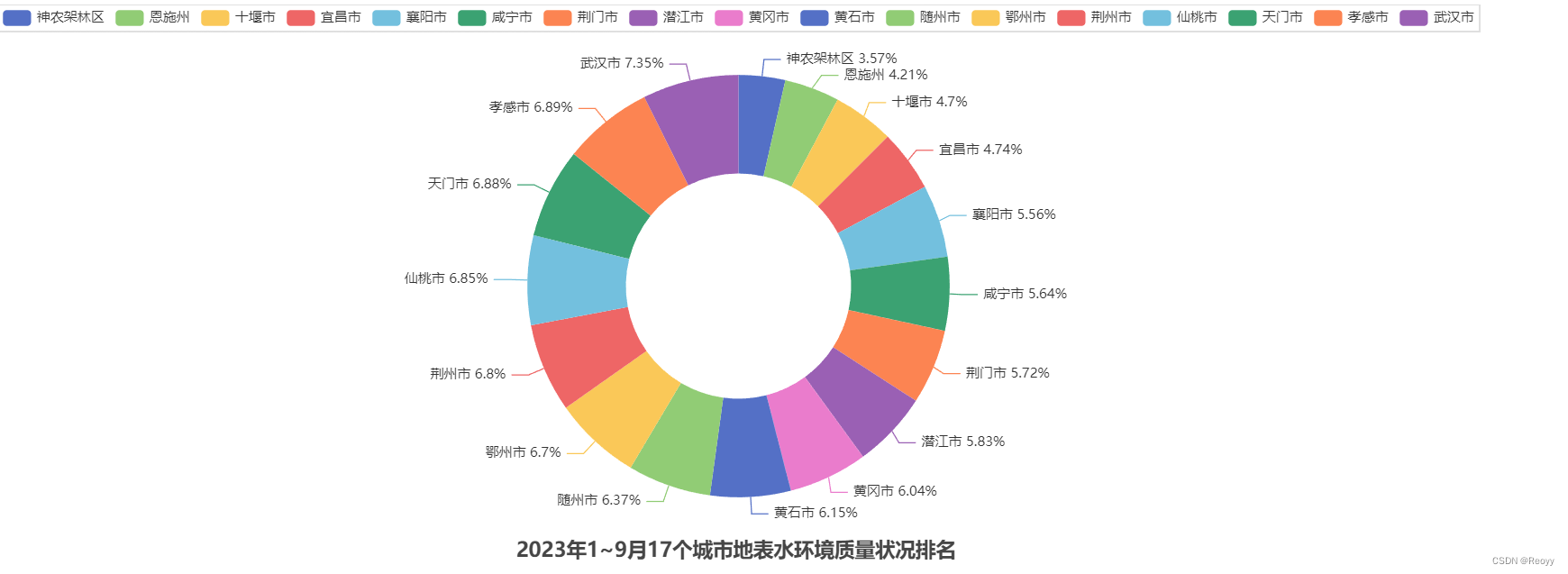

Pie chart

from pyecharts import options as opts
from pyecharts.charts import Pie

# City name -> index value for each of the 17 cities
data = {
    '神农架林区': 2.6016,
    '恩施州': 3.0729,
    '十堰市': 3.4300,
    '宜昌市': 3.4555,
    '襄阳市': 4.0543,
    '咸宁市': 4.1145,
    '荆门市': 4.1777,
    '潜江市': 4.2574,
    '黄冈市': 4.4093,
    '黄石市': 4.4914,
    '随州市': 4.6480,
    '鄂州市': 4.8873,
    '荆州市': 4.9619,
    '仙桃市': 5.0019,
    '天门市': 5.0204,
    '孝感市': 5.0245,
    '武汉市': 5.3657,
}

x_data = list(data.keys())
y_data = list(data.values())

pie = Pie()
pie.width = "1500px"
# Inner radius 40%, outer radius 75%: the pie is drawn as a ring
pie.add("", [list(z) for z in zip(x_data, y_data)], radius=["40%", "75%"])
pie.set_global_opts(
    title_opts=opts.TitleOpts(
        title="2023年1~9月17个城市地表水环境质量状况排名",
        pos_bottom="0px",
        pos_left="36.5%",
    )
)
# {b} is the item name, {c} its value, {d} its percentage of the total
pie.set_series_opts(label_opts=opts.LabelOpts(formatter="{b} {d}%"))
pie.render("饼状图.html")
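The `{d}` placeholder in the label formatter shows each slice's share of the total. As a rough check of what those labels should read, the shares can be computed by hand. This is a minimal sketch: `slice_percentages` is a hypothetical helper, the dict is only a subset of the data, and ECharts applies its own rounding, so the displayed digits may differ slightly.

```python
# Sketch: approximate the percentages that the "{d}" placeholder displays.
# slice_percentages is a hypothetical helper, not part of pyecharts.

def slice_percentages(values):
    """Return each value's share of the total, in percent, rounded to 2 places."""
    total = sum(values.values())
    return {name: round(value / total * 100, 2) for name, value in values.items()}

# Subset of the data used in the pie chart
data = {"神农架林区": 2.6016, "恩施州": 3.0729, "武汉市": 5.3657}
shares = slice_percentages(data)

for name, percent in shares.items():
    print(f"{name} {percent}%")
```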

Bar chart

from pyecharts import options as opts
from pyecharts.charts import Bar

# City name -> CWQI value for each of the 17 cities
data = {
    '神农架林区': 2.6016,
    '恩施州': 3.0729,
    '十堰市': 3.4300,
    '宜昌市': 3.4555,
    '襄阳市': 4.0543,
    '咸宁市': 4.1145,
    '荆门市': 4.1777,
    '潜江市': 4.2574,
    '黄冈市': 4.4093,
    '黄石市': 4.4914,
    '随州市': 4.6480,
    '鄂州市': 4.8873,
    '荆州市': 4.9619,
    '仙桃市': 5.0019,
    '天门市': 5.0204,
    '孝感市': 5.0245,
    '武汉市': 5.3657,
}

x_data = list(data.keys())
y_data = list(data.values())

bar = Bar()
bar.width = '1500px'
# Set the categories first, then add the series
bar.add_xaxis(x_data)
bar.add_yaxis("2023年1~9月17个城市地表水环境质量状况排名", y_data, color='#85C1E9')

# Alternative 1: axis names only
# bar.set_global_opts(xaxis_opts=opts.AxisOpts(name="城市"), yaxis_opts=opts.AxisOpts(name="CWQI"))
# Alternative 2: rotate the city labels by 45 degrees
# bar.set_global_opts(xaxis_opts=opts.AxisOpts(name="城市", axislabel_opts={"rotate": 45}), yaxis_opts=opts.AxisOpts(name="CWQI"))

# "interval": 0 forces every city label to be drawn
bar.set_global_opts(
    xaxis_opts=opts.AxisOpts(name="城市", axislabel_opts={"interval": 0}),
    yaxis_opts=opts.AxisOpts(name="CWQI"),
)
bar.render("直方图.html")

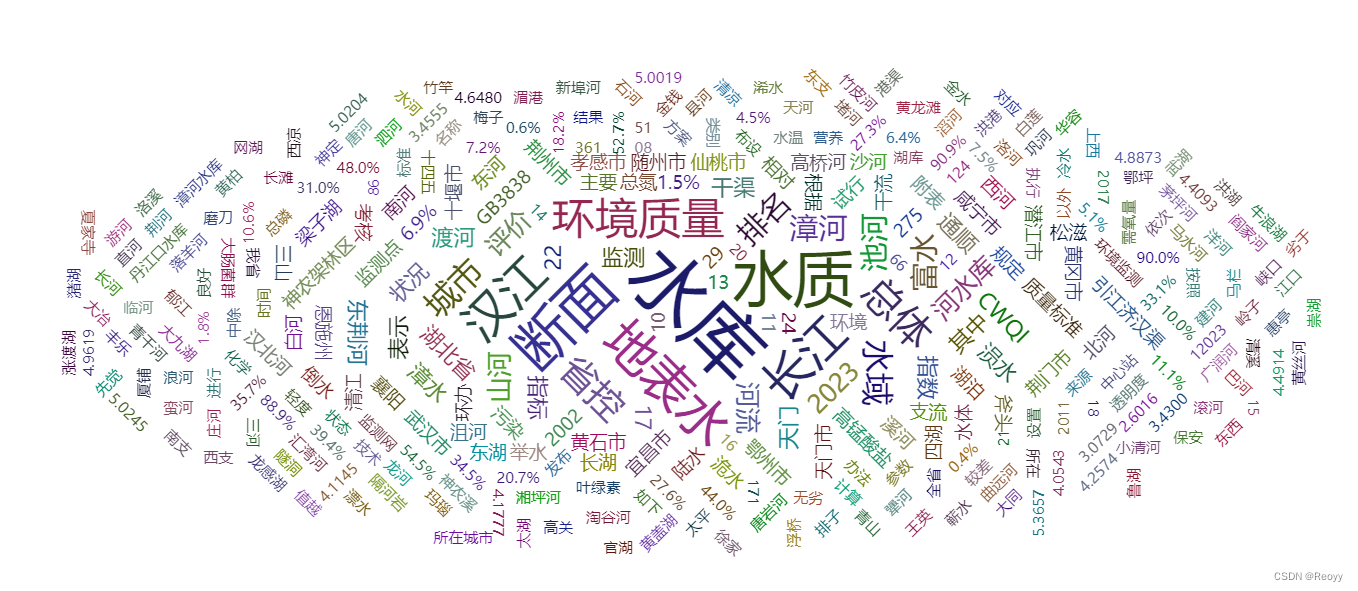
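The bars are drawn in the order the dict was written, which here happens to be ascending. If the data arrived unsorted, a ranking chart would probably want it sorted first. A minimal sketch, assuming a hypothetical helper `sorted_axes` (not part of pyecharts) and a three-city sample:

```python
# Sketch: sort the city/value pairs before splitting them into the two axes.
# sorted_axes is a hypothetical helper introduced for illustration.

def sorted_axes(values, descending=False):
    """Return (names, scores) ordered by score."""
    ranked = sorted(values.items(), key=lambda item: item[1], reverse=descending)
    names = [name for name, _ in ranked]
    scores = [score for _, score in ranked]
    return names, scores

# Deliberately unsorted sample taken from the data above
sample = {"武汉市": 5.3657, "神农架林区": 2.6016, "宜昌市": 3.4555}
x_data, y_data = sorted_axes(sample)
print(x_data)
print(y_data)
```

The resulting `x_data` and `y_data` can be passed to `add_xaxis` and `add_yaxis` exactly as in the code above.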

Word cloud

from collections import Counter

import jieba
from pyecharts.charts import WordCloud

# Read the saved page text
with open("网页内容.txt", encoding="utf8") as f:
    text = f.read()

# Tokenize -> filter -> count frequencies
tokens = jieba.lcut(text)
# Keep only tokens longer than one character
words = [token for token in tokens if len(token) > 1]
word_counter = Counter(words)
# (word, count) pairs, most frequent first
words_list = word_counter.most_common(10000)
print(words_list)

wc = WordCloud()
wc.width = "1500px"
wc.add("", data_pair=words_list)
wc.render("词云.html")

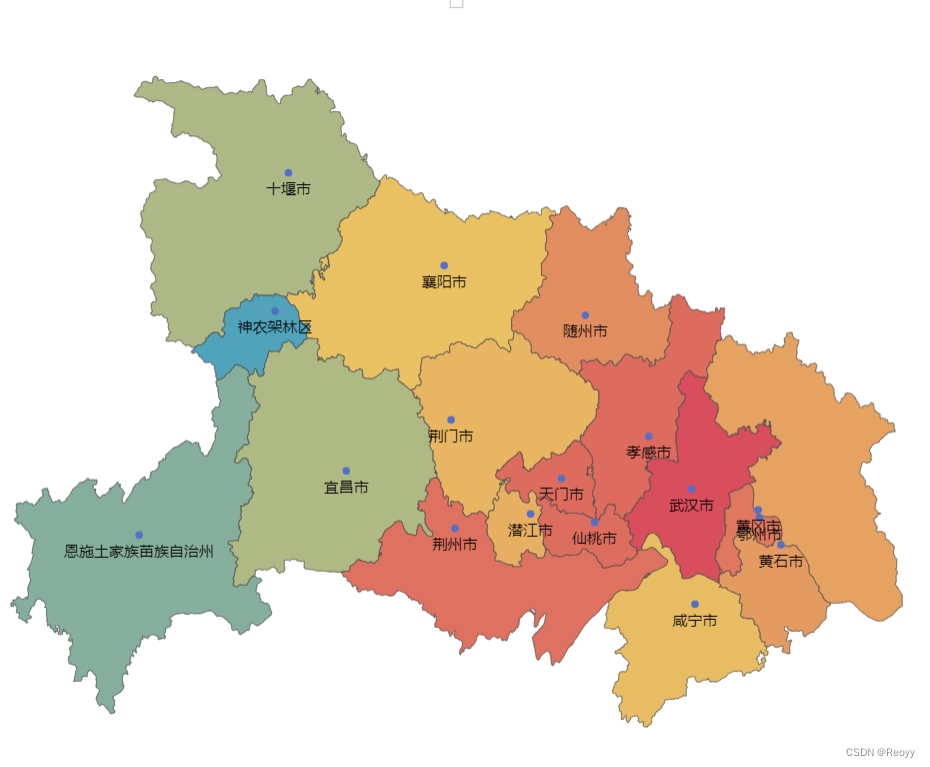
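The tokenize, filter, count pipeline can be tried without jieba or a saved page by feeding it a token list directly. This is a minimal sketch: `count_words` is a hypothetical helper, the token list is made up for illustration, and the `strip()` call is an addition that also drops whitespace-only tokens, which the original length check would let through.

```python
from collections import Counter

# Sketch of the filter -> count step on an already tokenized list.
# count_words is a hypothetical helper; in the code above the tokens
# come from jieba.lcut().

def count_words(tokens, min_len=2, top_n=10000):
    """Drop short tokens, then return (word, count) pairs, most frequent first."""
    kept = [token for token in tokens if len(token.strip()) >= min_len]
    return Counter(kept).most_common(top_n)

# Made-up tokens for illustration
tokens = ["水", "环境", "质量", "环境", "的", "水质", "环境", "\n\n"]
pairs = count_words(tokens)
print(pairs)
```

The returned list of `(word, count)` tuples has the same shape as `words_list`, so it can be passed to `WordCloud.add` as `data_pair`.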

Map

from pyecharts import options as opts
from pyecharts.charts import Map

# Note the full region name '恩施土家族苗族自治州' here
# (the earlier sections use the short form '恩施州')
data = {
    '神农架林区': 2.6016,
    '恩施土家族苗族自治州': 3.0729,
    '十堰市': 3.4300,
    '宜昌市': 3.4555,
    '襄阳市': 4.0543,
    '咸宁市': 4.1145,
    '荆门市': 4.1777,
    '潜江市': 4.2574,
    '黄冈市': 4.4093,
    '黄石市': 4.4914,
    '随州市': 4.6480,
    '鄂州市': 4.8873,
    '荆州市': 4.9619,
    '仙桃市': 5.0019,
    '天门市': 5.0204,
    '孝感市': 5.0245,
    '武汉市': 5.3657,
}

x_data = list(data.keys())
y_data = list(data.values())

# Named hubei_map so the built-in map() is not shadowed
hubei_map = Map()
hubei_map.width = "1500px"
hubei_map.height = "650px"
# The third argument is the map type: the Hubei province map
hubei_map.add("", [list(z) for z in zip(x_data, y_data)], "湖北")

# With the default range:
# hubei_map.set_global_opts(visualmap_opts=opts.VisualMapOpts(is_show=True))
# Use the data's own min and max as the visual map range
hubei_map.set_global_opts(
    visualmap_opts=opts.VisualMapOpts(is_show=True, max_=max(y_data), min_=min(y_data))
)
hubei_map.render(path="地图.html")
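The map section writes `恩施土家族苗族自治州` where the pie and bar sections write `恩施州`, presumably because the map matches regions by their full name. One way to reuse the same data dict for both is a small alias table. A minimal sketch: `FULL_NAMES` and `normalize_region_names` are hypothetical names introduced here, and the table contains only the one alias that appears in this article.

```python
# Sketch: map short region names to the full names used in the map section.
# FULL_NAMES and normalize_region_names are hypothetical, for illustration only.

FULL_NAMES = {"恩施州": "恩施土家族苗族自治州"}

def normalize_region_names(values, aliases=FULL_NAMES):
    """Return a copy of values with aliased keys replaced by their full names."""
    return {aliases.get(name, name): value for name, value in values.items()}

# Subset of the data from the pie and bar sections
data = {"恩施州": 3.0729, "武汉市": 5.3657}
map_data = normalize_region_names(data)
# [name, value] pairs in the shape passed to Map.add above
data_pair = [list(item) for item in map_data.items()]
print(data_pair)
```

Names without an alias pass through unchanged, so regions that already use their full name are not affected.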

Original article: https://blog.csdn.net/QAZWCC/article/details/138009987
