Block Coordinate Descent算法的部分构造技巧

构造的目的

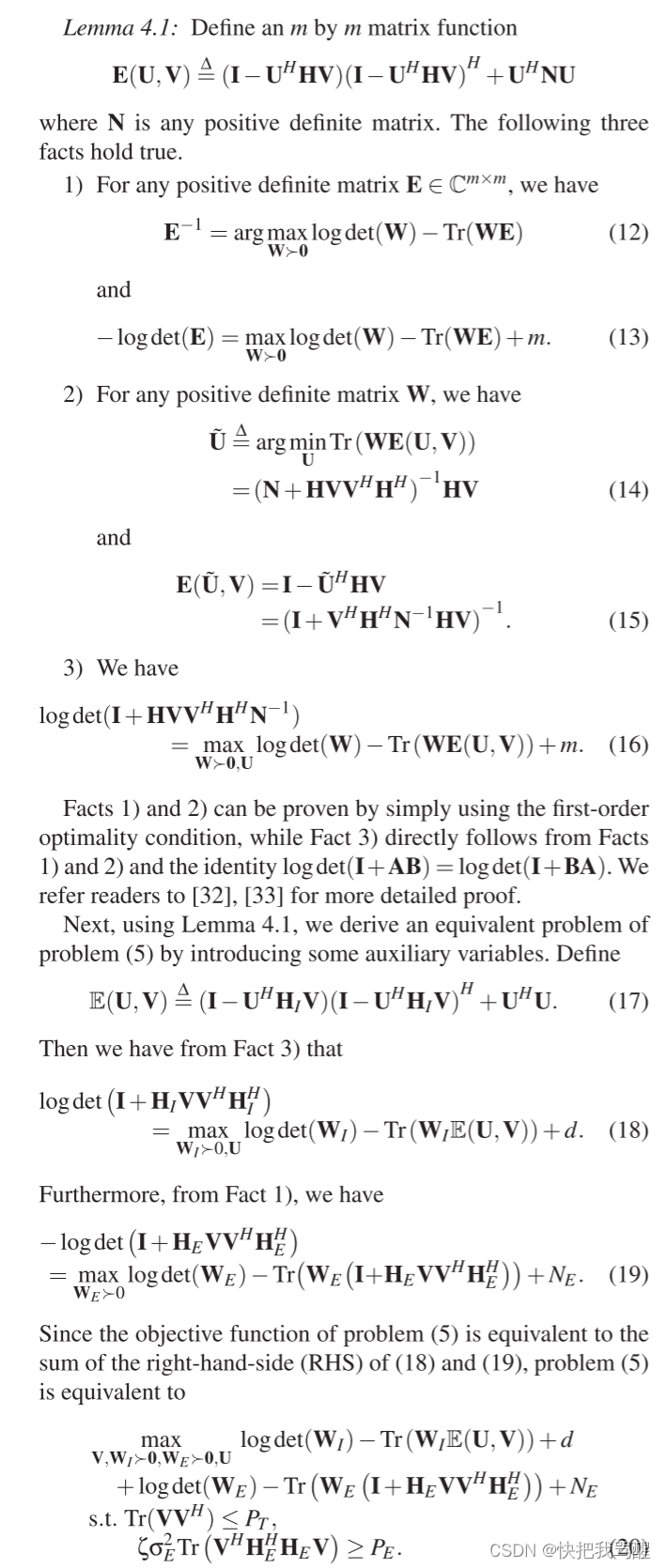

通过增加辅助变量,使原来的非凸问题变为关于各个变量的凸子问题,交替优化各个辅助变量。

定理

Define an

m

m

m by

m

m

m matrix function

E

(

U

,

V

)

≜

(

I

−

U

H

H

V

)

(

I

−

U

H

H

V

)

H

+

U

H

N

U

\mathbf{E}(\mathbf{U}, \mathbf{V}) \triangleq\left(\mathbf{I}-\mathbf{U}^{H} \mathbf{H} \mathbf{V}\right)\left(\mathbf{I}-\mathbf{U}^{H} \mathbf{H} \mathbf{V}\right)^{H}+\mathbf{U}^{H} \mathbf{N} \mathbf{U}

E(U,V)≜(I−UHHV)(I−UHHV)H+UHNU

where

N

\mathbf{N}

N is any positive definite matrix. The following three facts hold true.

-

For any positive definite matrix E ∈ C m × m , we have \text { For any positive definite matrix } \mathbf{E} \in \mathbb{C}^{m \times m} \text {, we have } For any positive definite matrix E∈Cm×m, we have

E − 1 = arg max W ≻ 0 log det ( W ) − Tr ( W E ) \mathbf{E}^{-1}=\arg \max _{\mathbf{W} \succ \mathbf{0}} \log \operatorname{det}(\mathbf{W})-\operatorname{Tr}(\mathbf{W E}) E−1=argW≻0maxlogdet(W)−Tr(WE)

(注:argmax表示找到使某个函数取得最大值的参数值)

and

− log det ( E ) = max W ≻ 0 log det ( W ) − Tr ( W E ) + m -\log \operatorname{det}(\mathbf{E})=\max _{\mathbf{W} \succ \mathbf{0}} \log \operatorname{det}(\mathbf{W})-\operatorname{Tr}(\mathbf{W E})+m −logdet(E)=W≻0maxlogdet(W)−Tr(WE)+m -

For any positive definite matrix W , we have \text { For any positive definite matrix } \mathbf{W} \text {, we have } For any positive definite matrix W, we have

U ~ ≜ arg min U Tr ( W E ( U , V ) ) = ( N + H V V H H H H ) − 1 H V \begin{aligned} \tilde{\mathbf{U}} & \triangleq \arg \min _{\mathbf{U}} \operatorname{Tr}(\mathbf{W E}(\mathbf{U}, \mathbf{V})) \\ & =\left(\mathbf{N}+\mathbf{H V V} \mathbf{H}^{H} \mathbf{H}^{H}\right)^{-1} \mathbf{H V} \end{aligned} U~≜argUminTr(WE(U,V))=(N+HVVHHHH)−1HV

and

E ( U ~ , V ) = I − U ~ H H V = ( I + V H H H N − 1 H V ) − 1 . \begin{aligned} \mathbf{E}(\tilde{\mathbf{U}}, \mathbf{V}) & =\mathbf{I}-\tilde{\mathbf{U}}^{H} \mathbf{H} \mathbf{V} \\ & =\left(\mathbf{I}+\mathbf{V}^{H} \mathbf{H}^{H} \mathbf{N}^{-1} \mathbf{H V}\right)^{-1} . \end{aligned} E(U~,V)=I−U~HHV=(I+VHHHN−1HV)−1.

3) We have

log

det

(

I

+

H

V

V

H

H

H

N

−

1

)

=

max

W

≻

0

,

U

log

det

(

W

)

−

Tr

(

W

E

(

U

,

V

)

)

+

m

\begin{aligned} \log \operatorname{det}(\mathbf{I}+ & \left.\mathbf{H V} \mathbf{V}^{H} \mathbf{H}^{H} \mathbf{N}^{-1}\right) \\ & =\max _{\mathbf{W} \succ \mathbf{0}, \mathbf{U}} \log \operatorname{det}(\mathbf{W})-\operatorname{Tr}(\mathbf{W E}(\mathbf{U}, \mathbf{V}))+m \end{aligned}

logdet(I+HVVHHHN−1)=W≻0,Umaxlogdet(W)−Tr(WE(U,V))+m

Facts 1) and 2) can be proven by simply using the first-order optimality condition, while Fact 3) directly follows from Facts 1) and 2) and the identity log det ( I + A B ) = log det ( I + B A ) \log \operatorname{det}(\mathbf{I}+\mathbf{A B})=\log \operatorname{det}(\mathbf{I}+\mathbf{B A}) logdet(I+AB)=logdet(I+BA) . We refer readers to [32], [33] for more detailed proof.

Next, using Lemma 4.1, we derive an equivalent problem of problem (5) by introducing some auxiliary variables. Define

E

(

U

,

V

)

≜

(

I

−

U

H

H

I

V

)

(

I

−

U

H

H

I

V

)

H

+

U

H

U

.

\mathbb{E}(\mathbf{U}, \mathbf{V}) \triangleq\left(\mathbf{I}-\mathbf{U}^{H} \mathbf{H}_{I} \mathbf{V}\right)\left(\mathbf{I}-\mathbf{U}^{H} \mathbf{H}_{I} \mathbf{V}\right)^{H}+\mathbf{U}^{H} \mathbf{U} .

E(U,V)≜(I−UHHIV)(I−UHHIV)H+UHU.

Then we have from Fact 3) that

log det ( I + H I V V H H I H ) = max W I ≻ 0 , U log det ( W I ) − Tr ( W I E ( U , V ) ) + d \begin{aligned} \log \operatorname{det}(\mathbf{I} & \left.+\mathbf{H}_{I} \mathbf{V} \mathbf{V}^{H} \mathbf{H}_{I}^{H}\right) \\ & =\max _{\mathbf{W}_{I} \succ 0, \mathbf{U}} \log \operatorname{det}\left(\mathbf{W}_{I}\right)-\operatorname{Tr}\left(\mathbf{W}_{I} \mathbb{E}(\mathbf{U}, \mathbf{V})\right)+d \end{aligned} logdet(I+HIVVHHIH)=WI≻0,Umaxlogdet(WI)−Tr(WIE(U,V))+d

Furthermore, from Fact 1), we have

− log det ( I + H E V V H H E H ) = max W E ≻ 0 log det ( W E ) − Tr ( W E ( I + H E V V H H E H ) ) + N E . \begin{array}{l} -\log \operatorname{det}\left(\mathbf{I}+\mathbf{H}_{E} \mathbf{V} \mathbf{V}^{H} \mathbf{H}_{E}^{H}\right) \\ =\max _{\mathbf{W}_{E} \succ 0} \log \operatorname{det}\left(\mathbf{W}_{E}\right)-\operatorname{Tr}\left(\mathbf{W}_{E}\left(\mathbf{I}+\mathbf{H}_{E} \mathbf{V} \mathbf{V}^{H} \mathbf{H}_{E}^{H}\right)\right)+N_{E} . \end{array} −logdet(I+HEVVHHEH)=maxWE≻0logdet(WE)−Tr(WE(I+HEVVHHEH))+NE.

另一篇中对于该定理的表述

Physical Layer Security in Near-Field Communications

该构造与WMMSE算法的关系

考虑一个全数字波束成形优化问题:

max

W

F

D

log

2

∣

I

M

B

+

σ

B

−

2

H

B

W

F

D

W

F

D

H

H

B

H

∣

s.t.

∥

W

F

D

∥

F

2

⩽

P

max

,

∥

H

W

W

F

D

∥

F

2

⩽

p

leak.

.

(26)

\begin{array}{ll} \max _{\mathbf{W}_{\mathrm{FD}}} & \log _{2}\left|\mathbf{I}_{M_{\mathrm{B}}}+\sigma_{\mathrm{B}}^{-2} \mathbf{H}_{\mathrm{B}} \mathbf{W}_{\mathrm{FD}} \mathbf{W}_{\mathrm{FD}}^{\mathrm{H}} \mathbf{H}_{\mathrm{B}}^{\mathrm{H}}\right| \\ \text { s.t. } & \left\|\mathbf{W}_{\mathrm{FD}}\right\|_{\mathrm{F}}^{2} \leqslant P_{\max }, \\ & \left\|\mathbf{H}_{\mathrm{W}} \mathbf{W}_{\mathrm{FD}}\right\|_{\mathrm{F}}^{2} \leqslant p_{\text {leak. }} . \tag{26} \end{array}

maxWFD s.t. log2

IMB+σB−2HBWFDWFDHHBH

∥WFD∥F2⩽Pmax,∥HWWFD∥F2⩽pleak. .(26)

基于 WMMSE 的算法被设计来解决这个子问题。其主要思想是通过利用速率最大化问题和均方误差(MSE)最小化问题之间的等价性,将原始问题转化为更容易处理的形式[38]。

Specifically, the signal vector

s

~

\widetilde{\mathbf{s}}

s

at Bob is estimated by an introduced linear receive beamforming matrix

U

∈

C

M

B

×

L

\mathbf{U} \in \mathbb{C}^{M_{\mathrm{B}} \times L}

U∈CMB×L as

s

~

=

U

H

y

B

\widetilde{\mathbf{s}}=\mathbf{U}^{\mathrm{H}} \mathbf{y}_{\mathrm{B}}

s

=UHyB . Then, the MSE matrix at Bob can be written as

E = E s , n B [ ( s ~ − s ) ( s ~ − s ) H ] = ( I M R − U H H B W F D ) ( I M R − U H H B W F D ) H + σ R 2 U H U . (27) \begin{aligned} \mathbf{E} & =\mathbb{E}_{\mathbf{s}, \mathbf{n}_{\mathrm{B}}}\left[(\widetilde{\mathbf{s}}-\mathbf{s})(\widetilde{\mathbf{s}}-\mathbf{s})^{\mathrm{H}}\right] \\ = & \left(\mathbf{I}_{M_{\mathrm{R}}}-\mathrm{U}^{\mathrm{H}} \mathbf{H}_{\mathrm{B}} \mathbf{W}_{\mathrm{FD}}\right)\left(\mathbf{I}_{M_{\mathrm{R}}}-\mathrm{U}^{\mathrm{H}} \mathrm{H}_{\mathrm{B}} \mathbf{W}_{\mathrm{FD}}\right)^{\mathrm{H}}+\sigma_{\mathrm{R}}^{2} \mathbf{U}^{\mathrm{H}} \mathbf{U} . \end{aligned} \tag{27} E==Es,nB[(s −s)(s −s)H](IMR−UHHBWFD)(IMR−UHHBWFD)H+σR2UHU.(27)

By introducing a weight matrix Ψ ≽ 0 \Psi \succcurlyeq 0 Ψ≽0 for Bob, the subproblem (26) can be equivalently reformulated as [38 , Thm. 1]

min Ψ , U , W F D Tr ( Ψ ) − log 2 ∣ Ψ ∣ s.t. ( 26 b ) , ( 26 c ) . (28) \begin{array}{ll} \min _{\boldsymbol{\Psi}, \mathbf{U}, \mathbf{W}_{\mathrm{FD}}} & \operatorname{Tr}(\boldsymbol{\Psi})-\log _{2}|\boldsymbol{\Psi}| \\ \text { s.t. } & (26 b),(26 \mathrm{c}) . \tag{28} \end{array} minΨ,U,WFD s.t. Tr(Ψ)−log2∣Ψ∣(26b),(26c).(28)

Although the transformed problem has more optimization variables than (26), the objective function in (28) is more tractable. The receive beamforming matrix U \mathbf{U} U and the weight matrix Ψ \Psi Ψ only appear in the objective function (28a). By setting the derivatives of (28a) with respective to U \mathbf{U} U and Ψ \Psi Ψ to zero, respectively, the optimal solutions can be obtained as

U ⋆ = ( H B W F D W F D H H B H + σ B 2 I M B ) − 1 H B W F D , Ψ ⋆ = E − 1 (29) \begin{array}{l} \mathbf{U}^{\star}=\left(\mathbf{H}_{\mathrm{B}} \mathbf{W}_{\mathrm{FD}} \mathbf{W}_{\mathrm{FD}}^{\mathrm{H}} \mathbf{H}_{\mathrm{B}}^{\mathrm{H}}+\sigma_{\mathrm{B}}^{2} \mathbf{I}_{M_{\mathrm{B}}}\right)^{-1} \mathbf{H}_{\mathrm{B}} \mathbf{W}_{\mathrm{FD}}, \\ \mathbf{\Psi}^{\star}=\mathbf{E}^{-1} \tag{29} \end{array} U⋆=(HBWFDWFDHHBH+σB2IMB)−1HBWFD,Ψ⋆=E−1(29)

Substituting the optimal \mathbf{U}^{\star} in (29) into (27) yields the optimal MSE matrix as follows

E ⋆ = I M B − W F D H H B H ( H B W F D W F D H H B H + σ B 2 I M B ) − 1 H B W F D . (30) \begin{array}{l} \mathbf{E}^{\star}= \\ \mathbf{I}_{M_{\mathrm{B}}}-\mathbf{W}_{\mathrm{FD}}^{\mathrm{H}} \mathbf{H}_{\mathrm{B}}^{\mathrm{H}}\left(\mathbf{H}_{\mathrm{B}} \mathbf{W}_{\mathrm{FD}} \mathbf{W}_{\mathrm{FD}}^{\mathrm{H}} \mathbf{H}_{\mathrm{B}}^{\mathrm{H}}+\sigma_{\mathrm{B}}^{2} \mathbf{I}_{M_{\mathrm{B}}}\right)^{-1} \mathbf{H}_{\mathrm{B}} \mathbf{W}_{\mathrm{FD}} . \tag{30} \end{array} E⋆=IMB−WFDHHBH(HBWFDWFDHHBH+σB2IMB)−1HBWFD.(30)

Substituting (27) into the objective function of (28) and discarding the constant terms, the problem that updates the full-digital beamforming matrix \mathbf{W}_{\mathrm{FD}} is transformed as

min W F D Tr ( W F D H H B H U Ψ U H H B W F D ) − Tr ( Ψ W F D H H B H U ) − Tr ( Ψ U H H B W F D ) s.t. ( 26 b ) , ( 26 c ) . \begin{array}{ll} \min _{\mathbf{W}_{\mathrm{FD}}} & \operatorname{Tr}\left(\mathbf{W}_{\mathrm{FD}}^{\mathrm{H}} \mathbf{H}_{\mathrm{B}}^{\mathrm{H}} \mathbf{U} \boldsymbol{\Psi} \mathbf{U}^{\mathrm{H}} \mathbf{H}_{\mathrm{B}} \mathbf{W}_{\mathrm{FD}}\right)-\operatorname{Tr}\left(\boldsymbol{\Psi} \mathbf{W}_{\mathrm{FD}}^{\mathrm{H}} \mathbf{H}_{\mathrm{B}}^{\mathrm{H}} \mathbf{U}\right) \\ & -\operatorname{Tr}\left(\boldsymbol{\Psi} \mathbf{U}^{\mathrm{H}} \mathbf{H}_{\mathrm{B}} \mathbf{W}_{\mathrm{FD}}\right) \\ \text { s.t. } & (26 \mathrm{~b}),(26 \mathrm{c}) . \end{array} minWFD s.t. Tr(WFDHHBHUΨUHHBWFD)−Tr(ΨWFDHHBHU)−Tr(ΨUHHBWFD)(26 b),(26c).

WMMSE与BCD构造的对比辨析

WMMSE变换后的表达式(27)与 E ( U , V ) \mathbf{E}(\mathbf{U}, \mathbf{V}) E(U,V)极为相似,

出处

https://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=7018097 lemma 4.1

原文地址:https://blog.csdn.net/qq_45542321/article/details/136234652

免责声明:本站文章内容转载自网络资源,如本站内容侵犯了原著者的合法权益,可联系本站删除。更多内容请关注自学内容网(zxcms.com)!