LVS + Keepalived + Nginx: Dual-Master Hot Standby for Load Balancing


| Hostname | IP |
| --- | --- |
| nginx01 | 11.0.1.31 |
| nginx02 | 11.0.1.32 |
| lvs01 | 11.0.1.33 |
| lvs02 | 11.0.1.34 |
| VIP1 | 11.0.1.29 |
| VIP2 | 11.0.1.30 |

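1. How It Works

In the LVS + Keepalived + Nginx master/backup setup, only one LVS node is ever working, so resource utilization is low. Dual-master mode solves this: each LVS node is the master for one VIP and the backup for the other, so both nodes carry traffic while still covering for each other.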

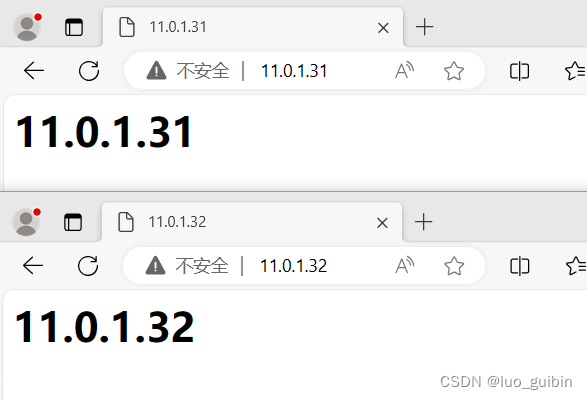

Before you start, make sure the real servers 11.0.1.31 and 11.0.1.32 serve their nginx pages correctly when accessed directly.

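2. Real Server (nginx) Configuration

Run the real_server.sh script on both nginx01 and nginx02.

Create real_server.sh and run it. The script adds two virtual interfaces on the loopback device (one per VIP), adds kernel host routes for the VIPs, and changes the ARP settings so that the real servers do not answer ARP requests for the VIPs (in LVS-DR mode only the active LVS node may answer for a VIP).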

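```shell
[root@nginx01 ~]# cat /etc/keepalived/real_server.sh
#!/bin/bash
# Bind VIP1 to a loopback alias with a host mask so nginx accepts traffic addressed to it
ifconfig lo:0 11.0.1.29 netmask 255.255.255.255 broadcast 11.0.1.29
route add -host 11.0.1.29 dev lo:0
# Same for VIP2
ifconfig lo:1 11.0.1.30 netmask 255.255.255.255 broadcast 11.0.1.30
route add -host 11.0.1.30 dev lo:1
# arp_ignore=1: answer ARP only for addresses configured on the receiving interface,
# so the VIPs on lo are never announced to the LAN
echo "1" >/proc/sys/net/ipv4/conf/lo/arp_ignore
# arp_announce=2: always use the best local address (never a VIP) as the ARP source
echo "2" >/proc/sys/net/ipv4/conf/lo/arp_announce
echo "1" >/proc/sys/net/ipv4/conf/all/arp_ignore
echo "2" >/proc/sys/net/ipv4/conf/all/arp_announce
```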
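As a sketch only: on distributions where net-tools (`ifconfig`, `route`) is no longer installed by default, the same real-server setup can be written with iproute2 `ip` and `sysctl`. The helper names `run` and `real_server_setup` and the `APPLY` variable are hypothetical. By default the script only prints the commands it would run; setting `APPLY=1` executes them, which requires root. The explicit host route from the original script is left out on the assumption that the kernel already adds a local route when an address is assigned to `lo`.

```shell
#!/bin/sh
# Hypothetical helper: print each command, or run it when APPLY=1 (needs root).
run() {
  if [ "${APPLY:-0}" = "1" ]; then
    "$@"
  else
    echo "$*"
  fi
}

# Hypothetical iproute2 equivalent of real_server.sh; VIPs are passed as arguments.
real_server_setup() {
  i=0
  for vip in "$@"; do
    # /32 on lo: the host accepts packets for the VIP without claiming the subnet
    run ip addr add "$vip/32" dev lo label "lo:$i"
    i=$((i + 1))
  done
  # Same ARP settings as real_server.sh, for both lo and all interfaces
  for scope in lo all; do
    run sysctl -w "net.ipv4.conf.$scope.arp_ignore=1"
    run sysctl -w "net.ipv4.conf.$scope.arp_announce=2"
  done
}

real_server_setup 11.0.1.29 11.0.1.30
```

Like real_server.sh, these settings live only in the running kernel and are lost on reboot unless they are re-applied at boot.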

```shell
[root@nginx01 ~]# chmod +x /etc/keepalived/real_server.sh
```

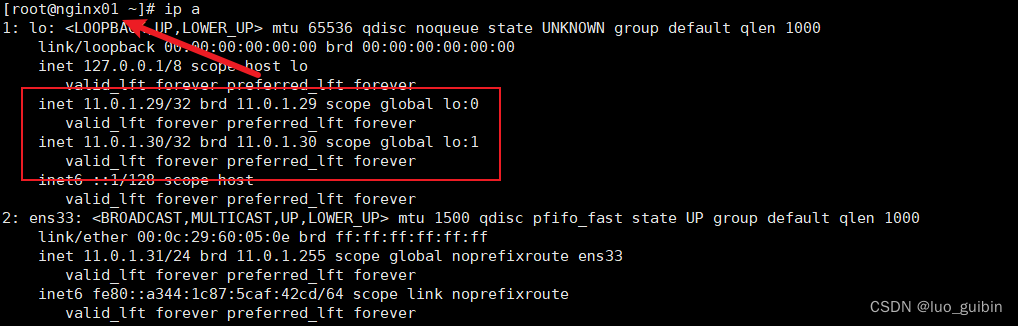

```shell
[root@nginx01 ~]# sh /etc/keepalived/real_server.sh
```

Check the interface information on nginx01:

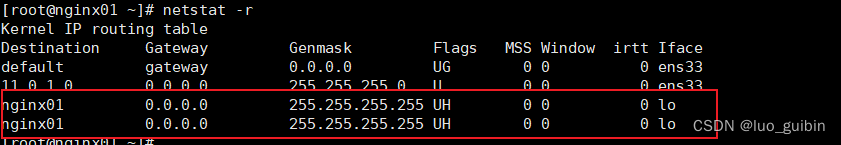

Check the interface information on nginx02:
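Instead of reading the interface listing by eye, the check can be scripted. The sketch below assumes the one-line-per-address output of `ip -o -4 addr show dev <iface>`; the function name `check_vips` is hypothetical. It reports each VIP that is (or is not) assigned with a /32 mask and returns non-zero if any is missing. The same helper should also work on the LVS nodes against `dev ens33`, on the assumption that keepalived adds VIPs without a prefix length as /32.

```shell
#!/bin/sh
# Hypothetical helper: reads `ip -o -4 addr show dev <iface>` output on stdin and
# reports whether each VIP given as an argument is assigned with a /32 mask.
# Returns 0 only if every VIP is present.
check_vips() {
  addrs=$(cat)
  rc=0
  for vip in "$@"; do
    # -F: match the address literally (dots are not regex wildcards)
    if printf '%s\n' "$addrs" | grep -qF "inet $vip/32 "; then
      echo "OK      $vip"
    else
      echo "MISSING $vip"
      rc=1
    fi
  done
  return "$rc"
}

# Usage on a real server:
#   ip -o -4 addr show dev lo | check_vips 11.0.1.29 11.0.1.30
```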

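3. Keepalived Configuration on the LVS Nodes

3.1 Configuration Files

Note that there are two VRRP instances and two VIPs; each VRRP instance manages one VIP:

| VRRP instance | MASTER | BACKUP |
| --- | --- | --- |
| VRRP 1 (VIP 11.0.1.29) | 11.0.1.33 (lvs01) | 11.0.1.34 (lvs02) |
| VRRP 2 (VIP 11.0.1.30) | 11.0.1.34 (lvs02) | 11.0.1.33 (lvs01) |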
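Which node holds a VIP is decided per VRRP instance: among the nodes that are alive and advertising for that instance, the one with the highest priority becomes master. The two instances use different `virtual_router_id` values (31 and 41) so their elections stay independent. The sketch below is a deliberately simplified model of that election, not keepalived code: it ignores timers and preemption settings. It follows the VRRP rule that a priority tie goes to the higher primary IP address. The function names are hypothetical; the priorities are the ones used in the configurations that follow.

```shell
#!/bin/sh
# Simplified model of a VRRP master election (illustration only, not keepalived code).
# Each argument is "address:priority" for a node that is alive and advertising.

# Convert a dotted IPv4 address to an integer for tie-break comparison.
ip_to_int() {
  old_ifs=$IFS
  IFS=.
  set -- $1          # split a.b.c.d into $1..$4
  IFS=$old_ifs
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Highest priority wins; on a tie the higher primary IP address wins.
vrrp_master() {
  best_addr=""
  best_prio=-1
  best_int=-1
  for node in "$@"; do
    addr=${node%%:*}
    prio=${node##*:}
    n=$(ip_to_int "$addr")
    if [ "$prio" -gt "$best_prio" ] ||
       { [ "$prio" -eq "$best_prio" ] && [ "$n" -gt "$best_int" ]; }; then
      best_addr=$addr
      best_prio=$prio
      best_int=$n
    fi
  done
  echo "$best_addr"
}

# lvs01 = 11.0.1.33, lvs02 = 11.0.1.34
echo "VI_1 (11.0.1.29) master: $(vrrp_master 11.0.1.33:100 11.0.1.34:99)"
echo "VI_2 (11.0.1.30) master: $(vrrp_master 11.0.1.33:99 11.0.1.34:100)"
# lvs01 stopped: only lvs02 still advertises, so it takes VI_1 as well
echo "VI_1 master with lvs01 down: $(vrrp_master 11.0.1.34:99)"
```

With both nodes up, each one owns one VIP. With lvs01 gone, lvs02 owns both, which is the failover tested in section 4.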

lvs01 (11.0.1.33):

```
[root@lvs01-33 ~]# cat /etc/keepalived/keepalived.conf
# VI_1 carries VIP 11.0.1.29: this node is MASTER (priority 100)
vrrp_instance VI_1 {
    state MASTER
    interface ens33
    virtual_router_id 31
    priority 100
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass abcd
    }
    virtual_ipaddress {
        11.0.1.29
    }
}

# VI_2 carries VIP 11.0.1.30: this node is BACKUP (priority 99)
vrrp_instance VI_2 {
    state BACKUP
    interface ens33
    virtual_router_id 41
    priority 99
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass abcd
    }
    virtual_ipaddress {
        11.0.1.30
    }
}

# LVS-DR virtual service for VIP1: round-robin over both nginx servers,
# TCP health check on port 80 every 6 seconds (delay_loop)
virtual_server 11.0.1.29 80 {
    delay_loop 6
    lb_algo rr
    lb_kind DR
    protocol TCP

    real_server 11.0.1.31 80 {
        weight 1
        # on health-check failure set the weight to 0 instead of removing the server
        inhibit_on_failure
        TCP_CHECK {
            connect_timeout 3
            nb_get_retry 2
            delay_before_retry 1
            connect_port 80
        }
    }

    real_server 11.0.1.32 80 {
        weight 1
        inhibit_on_failure
        TCP_CHECK {
            connect_timeout 3
            nb_get_retry 2
            delay_before_retry 1
            connect_port 80
        }
    }
}

# Same virtual service for VIP2
virtual_server 11.0.1.30 80 {
    delay_loop 6
    lb_algo rr
    lb_kind DR
    protocol TCP

    real_server 11.0.1.31 80 {
        weight 1
        inhibit_on_failure
        TCP_CHECK {
            connect_timeout 3
            nb_get_retry 2
            delay_before_retry 1
            connect_port 80
        }
    }

    real_server 11.0.1.32 80 {
        weight 1
        inhibit_on_failure
        TCP_CHECK {
            connect_timeout 3
            nb_get_retry 2
            delay_before_retry 1
            connect_port 80
        }
    }
}
```

lvs02 (11.0.1.34):

```
[root@lvs02-34 ~]# cat /etc/keepalived/keepalived.conf
# VI_1 carries VIP 11.0.1.29: this node is BACKUP (priority 99)
vrrp_instance VI_1 {
    state BACKUP
    interface ens33
    virtual_router_id 31
    priority 99
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass abcd
    }
    virtual_ipaddress {
        11.0.1.29
    }
}

# VI_2 carries VIP 11.0.1.30: this node is MASTER (priority 100)
vrrp_instance VI_2 {
    state MASTER
    interface ens33
    virtual_router_id 41
    priority 100
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass abcd
    }
    virtual_ipaddress {
        11.0.1.30
    }
}

# The virtual_server blocks are identical to those on lvs01
virtual_server 11.0.1.29 80 {
    delay_loop 6
    lb_algo rr
    lb_kind DR
    protocol TCP

    real_server 11.0.1.31 80 {
        weight 1
        inhibit_on_failure
        TCP_CHECK {
            connect_timeout 3
            nb_get_retry 2
            delay_before_retry 1
            connect_port 80
        }
    }

    real_server 11.0.1.32 80 {
        weight 1
        inhibit_on_failure
        TCP_CHECK {
            connect_timeout 3
            nb_get_retry 2
            delay_before_retry 1
            connect_port 80
        }
    }
}

virtual_server 11.0.1.30 80 {
    delay_loop 6
    lb_algo rr
    lb_kind DR
    protocol TCP

    real_server 11.0.1.31 80 {
        weight 1
        inhibit_on_failure
        TCP_CHECK {
            connect_timeout 3
            nb_get_retry 2
            delay_before_retry 1
            connect_port 80
        }
    }

    real_server 11.0.1.32 80 {
        weight 1
        inhibit_on_failure
        TCP_CHECK {
            connect_timeout 3
            nb_get_retry 2
            delay_before_retry 1
            connect_port 80
        }
    }
}
```

3.2 Start the keepalived Service

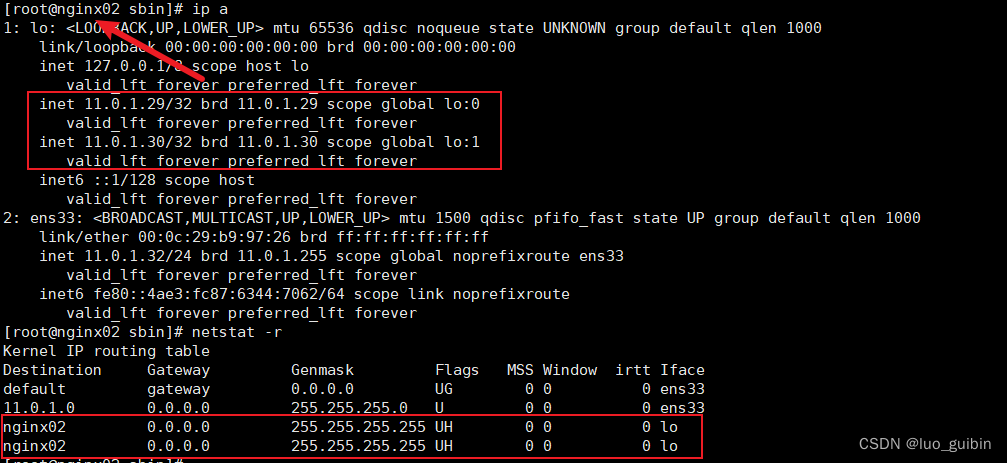

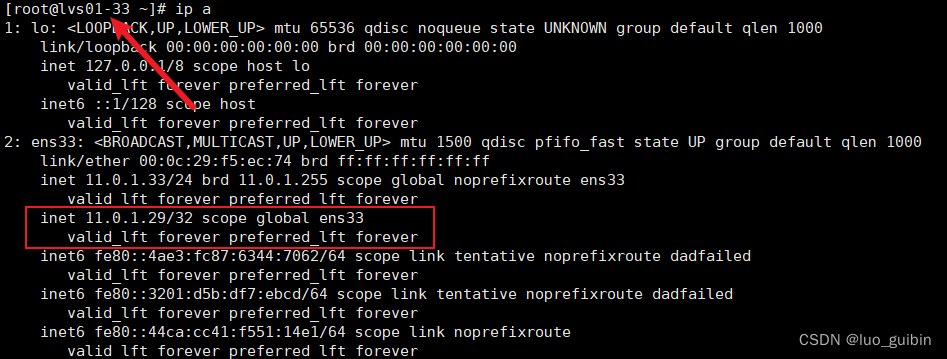

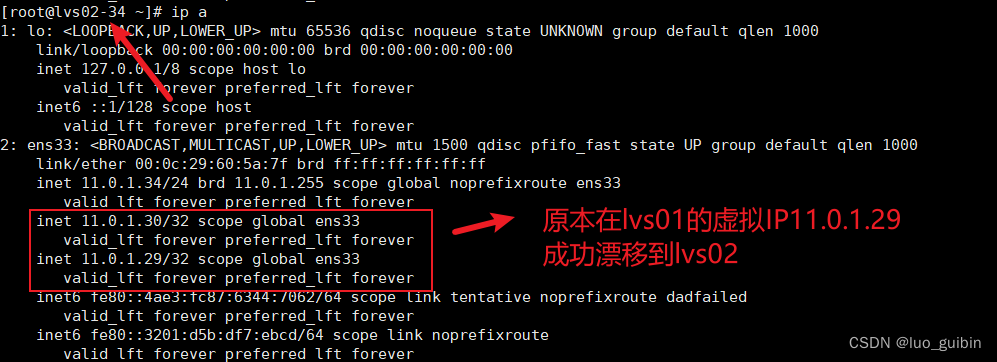

Check the interface information on lvs01:

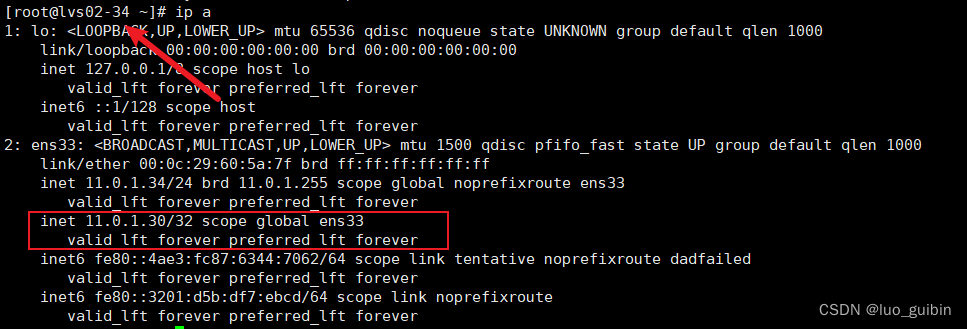

Check the interface information on lvs02:
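On systemd-based hosts the service is normally started with `systemctl start keepalived`, and `systemctl enable keepalived` starts it at boot. To confirm that keepalived has built the IPVS rules from the `virtual_server` blocks, `ipvsadm -Ln` (from the ipvsadm package) lists each virtual service with its real servers and weights. The sketch below condenses that listing into one line per virtual service. The function name `ipvs_summary` is hypothetical, and it assumes the usual `ipvsadm -Ln` column layout, where the fourth field of a real-server line is the weight. Because the configuration uses `inhibit_on_failure`, a failed nginx should stay listed with weight 0 rather than disappear.

```shell
#!/bin/sh
# Hypothetical helper: summarise `ipvsadm -Ln` output (read on stdin) as
# "<virtual service> <real servers with weight > 0>/<all real servers>".
ipvs_summary() {
  awk '
    # Virtual service line, e.g. "TCP  11.0.1.29:80 rr"
    $1 == "TCP" || $1 == "UDP" {
      vs = $2; order[++n] = vs; live[vs] = 0; total[vs] = 0; next
    }
    # Real server line, e.g. "  -> 11.0.1.31:80   Route   1   0   0" (4th field = weight);
    # the column header line comes before any virtual service, so vs is still empty there
    $1 == "->" && vs != "" {
      total[vs]++
      if ($4 + 0 > 0) live[vs]++
    }
    END {
      for (i = 1; i <= n; i++) printf "%s %d/%d\n", order[i], live[order[i]], total[order[i]]
    }
  '
}

# Usage on an LVS node (requires root and the ipvsadm package):
#   ipvsadm -Ln | ipvs_summary
```

With both nginx servers healthy, each VIP should report `2/2` on both LVS nodes, since both run the same `virtual_server` blocks.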

4. Testing

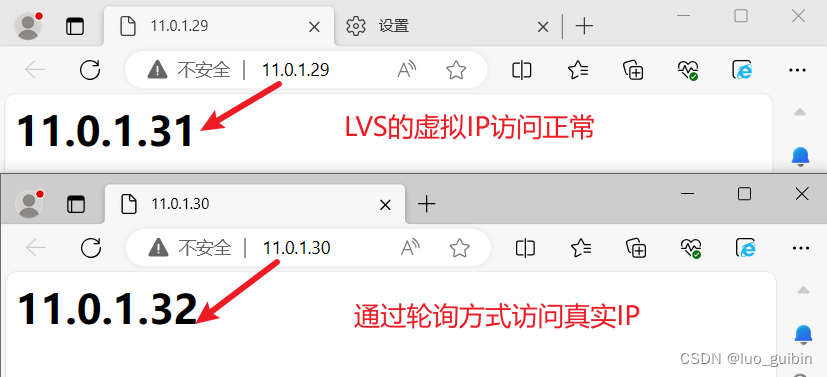

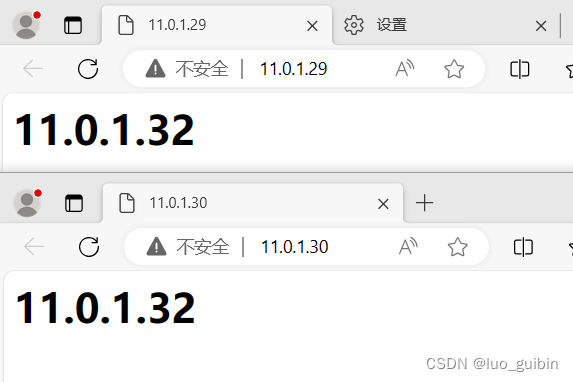

4.1 Accessing the VIPs
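Round-robin can also be checked from a client shell instead of a browser. In this sketch, `probe_vip` and `tally` are hypothetical helpers. It assumes each nginx index page returns a one-line body that names its host (for example `nginx01`); with a multi-line HTML page, every line would be counted separately. Each `curl` run opens a new TCP connection, so LVS can pick a different real server on every request.

```shell
#!/bin/sh
# probe_vip VIP [COUNT]: fetch http://VIP/ COUNT times (default 6), one new
# TCP connection per request, printing each response body.
probe_vip() {
  vip=$1
  count=${2:-6}
  i=0
  while [ "$i" -lt "$count" ]; do
    curl -s --max-time 2 "http://$vip/"
    echo                       # make sure each response ends with a newline
    i=$((i + 1))
  done
}

# tally: count identical non-empty lines read on stdin, sorted by content
tally() {
  awk 'NF { seen[$0]++ } END { for (line in seen) print line, seen[line] }' | sort
}

# Usage (from a client that can reach the VIPs):
#   probe_vip 11.0.1.29 6 | tally
#   probe_vip 11.0.1.30 6 | tally
```

With `lb_algo rr` and both real servers healthy, the counts for nginx01 and nginx02 should come out roughly equal for each VIP.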

4.2 Simulating an lvs01 Failure

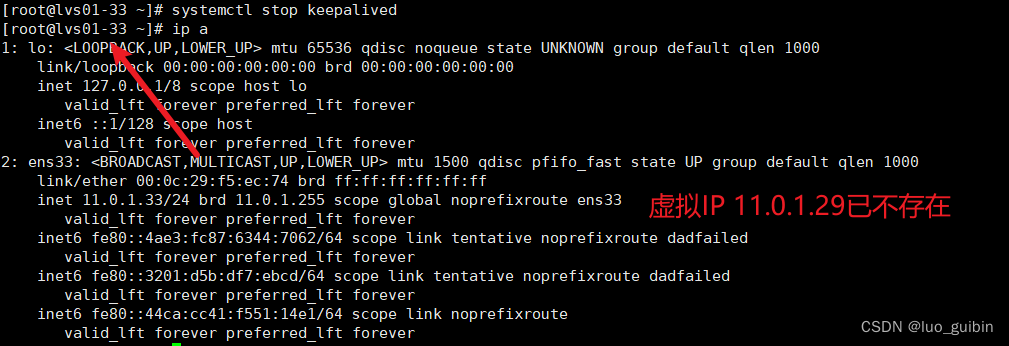

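To simulate an lvs01 failure, stop keepalived on lvs01.

Interface information on lvs02 after the failover (it should now hold both VIPs):

Access test: both VIPs remain usable, requests still reach nginx01 and nginx02, and round-robin scheduling keeps working. Clear the browser's cookies and cache before each refresh; otherwise the browser may reuse an existing connection or a cached page and keep showing the same backend.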

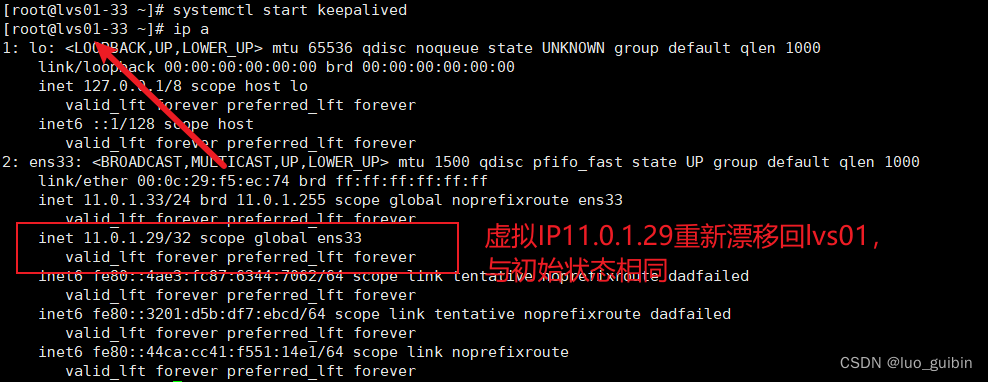

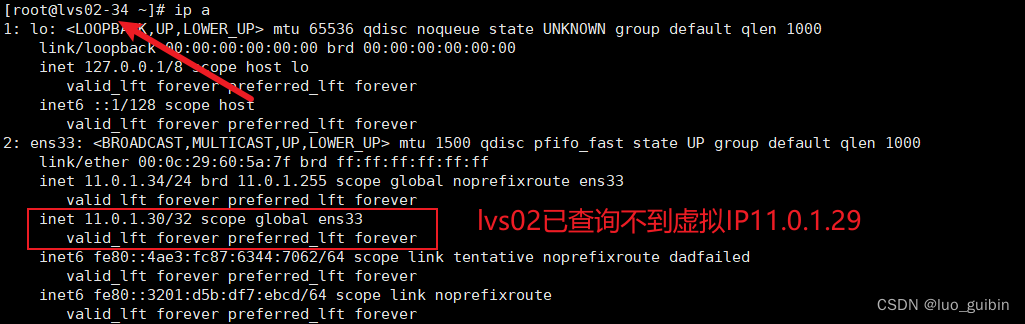

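After keepalived is restarted on lvs01, VIP 11.0.1.29 floats back to lvs01 (11.0.1.33): lvs01 has the higher priority (100) for VI_1, and keepalived preempts by default.

Simulating an lvs02 failure works the same way, so that experiment is not repeated here.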


Original article: https://blog.csdn.net/weixin_48878440/article/details/135446867
